What is the Integrated Gradients method?

Integrated Gradients (IG) [1] is an attribution method proposed by Sundararajan et al. that is built on two axioms: Sensitivity and Implementation Invariance. The authors argue that every attribution method should satisfy both of them. The two axioms are defined as follows:

Definition 1 (Axiom: Sensitivity) An attribution method satisfies Sensitivity if for every input and baseline that differ in one feature but have different predictions, then the differing feature should be given a non-zero attribution. If the function implemented by the deep network does not depend (mathematically) on some variable, then the attribution to that variable is always zero.

Definition 2 (Axiom: Implementation Invariance) Two networks are functionally equivalent if their outputs are equal for all inputs, despite having very different implementations. Attribution methods should satisfy Implementation Invariance, i.e., the attributions are always identical for two functionally equivalent networks.

The Sensitivity axiom introduces the baseline, which is an important part of the IG method. A baseline is defined as an input that represents the absence of a feature. This definition is confusing, especially when dealing with complex models, but the baseline can be interpreted as an “input from the input space that produces a neutral prediction”. A baseline can also serve as the reference point for a counterfactual explanation: we check how the model behaves when moving from the baseline to the original image.

The authors give a black image as an example of a baseline for an object recognition network. I personally think that a black image doesn’t represent an “absence of feature”, because this absence should be defined relative to the manifold that represents the data. A black image could work as an absence of a feature for one network but fail for a network trained on a different dataset, where the network may rely on black pixels when making a prediction.

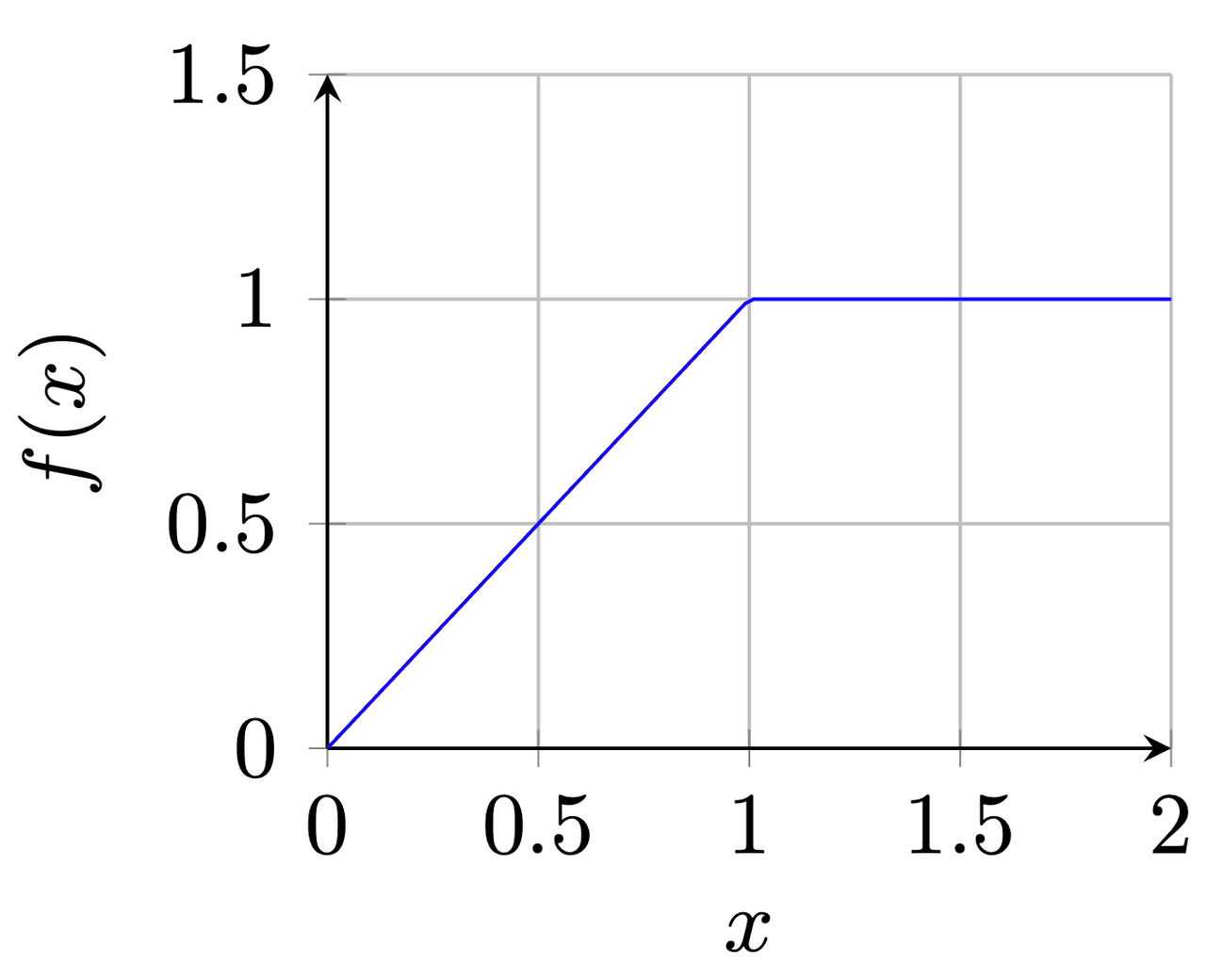

The authors argue that gradient-based methods violate Sensitivity (Def. 1). As an example, they present a simple function, $f(x) = 1 - \mathrm{ReLU}(1 - x)$ (see Fig. 1), with the baseline $x' = 0$. When generating the attribution for $x = 2$, the function’s output changes from 0 to 1, but after $x = 1$ the function becomes flat, so the gradient equals zero. Obviously, $x$ contributes to the result, but because the function is flat at the input we are testing, the gradient assigns it zero attribution, which breaks Sensitivity. Sundararajan et al. argue that breaking Sensitivity causes gradients to focus on irrelevant features.
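The Sensitivity failure can be reproduced numerically. The sketch below assumes the one-variable function from the paper's example, $f(x) = 1 - \mathrm{ReLU}(1 - x)$, with baseline $x' = 0$ and input $x = 2$. The helper names (`relu`, `f`, `numerical_gradient`) are illustrative, and the gradient is estimated with a central difference instead of a deep learning framework.

```python
def relu(z: float) -> float:
    return max(0.0, z)


def f(x: float) -> float:
    """Assumed example function: rises linearly until x = 1, then stays at 1."""
    return 1.0 - relu(1.0 - x)


def numerical_gradient(fn, x: float, eps: float = 1e-6) -> float:
    """Central-difference estimate of the derivative of fn at x."""
    return (fn(x + eps) - fn(x - eps)) / (2.0 * eps)


baseline, x = 0.0, 2.0

# The prediction differs between the baseline and the input...
output_change = f(x) - f(baseline)
# ...but the function is flat around x = 2, so the plain gradient vanishes.
plain_gradient = numerical_gradient(f, x)

print(f"f(baseline) = {f(baseline)}, f(x) = {f(x)}")
print(f"output change = {output_change}, gradient at x = {plain_gradient}")
```

The output changes by 1 while the gradient at the input is 0, so a method that reports only the local gradient gives the single differing feature zero attribution.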

How is IG calculated?

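In the IG definition we have a function $F: \mathbb{R}^n \rightarrow [0, 1]$ representing our model, an input $x \in \mathbb{R}^n$ ($x$ is in $\mathbb{R}^n$ because this is a general definition of IG and not CNN-specific), and the baseline $x' \in \mathbb{R}^n$. We assume a straight-line path between $x'$ and $x$ and compute gradients along that path. The integrated gradient along the $i$-th dimension is defined as:

$$
IG_i(x) = (x_i - x'_i) \int_{\alpha = 0}^{1} \frac{\partial F\left(x' + \alpha (x - x')\right)}{\partial x_i} \, d\alpha \tag{1}
$$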

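The integral in the original definition of Integrated Gradients cannot be computed exactly for a typical network. Therefore, implementations of the method approximate it by replacing the integral with a summation:

$$
IG_i^{approx}(x) = (x_i - x'_i) \sum_{k = 1}^{m} \frac{\partial F\left(x' + \frac{k}{m} (x - x')\right)}{\partial x_i} \cdot \frac{1}{m} \tag{2}
$$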

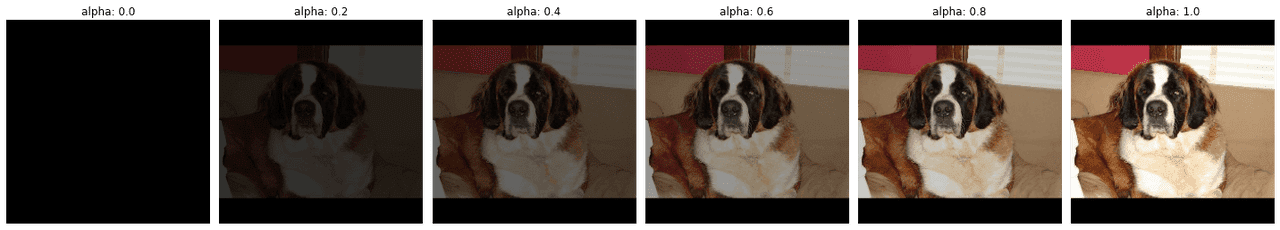

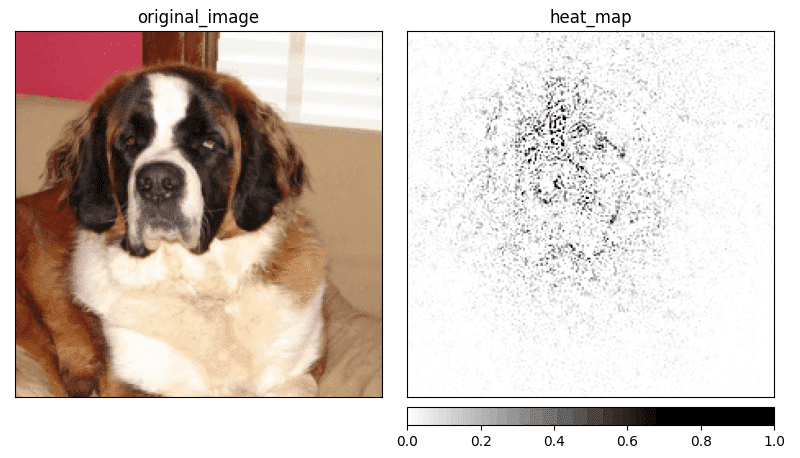

In the approximated calculation (Eq. 2), $m$ defines the number of interpolation steps. As an example, we can visualize the interpolations with $m$ equal to five (see Fig. 2). In practice, the number of interpolation steps is usually between 20 and 300, and the most commonly used value is 50. The results of applying IG can be seen in Figure 3.
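The summation can be sketched in a few lines of plain Python. This is a minimal illustration, not a reference implementation. It assumes a hypothetical `toy_model` in place of a real network, estimates gradients with central differences instead of backpropagation, and uses a right Riemann sum over `m` interpolation steps. All function names are made up for this example.

```python
from typing import Callable, List

Vector = List[float]


def interpolate(baseline: Vector, x: Vector, alpha: float) -> Vector:
    """Point on the straight line from the baseline (alpha=0) to x (alpha=1)."""
    return [b + alpha * (xi - b) for b, xi in zip(baseline, x)]


def numerical_gradient(fn: Callable[[Vector], float], point: Vector,
                       eps: float = 1e-5) -> Vector:
    """Central-difference estimate of the gradient of fn at point."""
    grad = []
    for i in range(len(point)):
        up, down = list(point), list(point)
        up[i] += eps
        down[i] -= eps
        grad.append((fn(up) - fn(down)) / (2.0 * eps))
    return grad


def integrated_gradients(fn: Callable[[Vector], float], x: Vector,
                         baseline: Vector, m: int = 50) -> Vector:
    """Approximate IG by averaging gradients at m points along the path."""
    total = [0.0] * len(x)
    for k in range(1, m + 1):
        grad = numerical_gradient(fn, interpolate(baseline, x, k / m))
        total = [t + g for t, g in zip(total, grad)]
    # Scale the averaged gradient by the distance from the baseline.
    return [(xi - b) * t / m for xi, b, t in zip(x, baseline, total)]


def toy_model(v: Vector) -> float:
    """Hypothetical model: depends on v[0] and v[1], ignores v[2]."""
    return v[0] ** 2 + 3.0 * v[1]


x = [2.0, 1.0, 5.0]
baseline = [0.0, 0.0, 0.0]

# The five interpolated inputs used when m = 5.
for k in range(1, 6):
    print(k, interpolate(baseline, x, k / 5))

for m in (5, 50, 300):
    attributions = integrated_gradients(toy_model, x, baseline, m=m)
    print(m, attributions, sum(attributions), toy_model(x) - toy_model(baseline))
```

For this toy model the exact attributions are 4, 3 and 0, and they sum to the difference between the model output at the input and at the baseline, which is 7. The approximated first attribution overshoots slightly and moves toward 4 as `m` grows. The feature the model ignores always receives zero attribution.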

Baselines

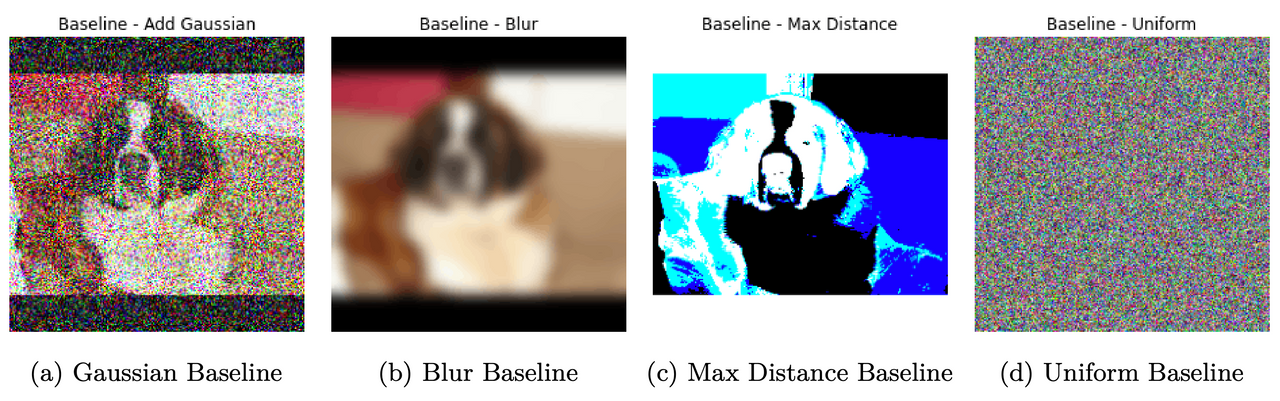

In recent years there has been discussion about replacing the constant-color baseline with an alternative. One of the first proposals was to add Gaussian noise to the original image (see Fig. 4a). The Gaussian baseline was introduced by Smilkov et al. [2] and uses a Gaussian distribution centered on the current image with a variance $\sigma$. This variance is the only parameter to tune when using the method.

Another baseline, called the Blur baseline, uses a multi-dimensional Gaussian filter (see Fig. 4b). The idea, presented by Fong and Vedaldi [3], is that a blurred version of the image is a domain-specific way to represent missing information and is therefore a valid baseline according to the original definition.

Inspired by the work of Fong and Vedaldi, Sturmfels et al. [4] introduced another baseline that is based on the original image. It is called the Maximum Distance baseline and is created by constructing the image with the largest $L_1$ distance from the original image. The result can be seen in Figure 4c. The problem with the Maximum Distance baseline is that it doesn’t represent the “absence of feature”: it contains the information about the original image, just in a different form.

In the same work, Sturmfels et al. created another baseline called the Uniform baseline. This time, the baseline doesn’t require an input image and is generated only from a uniform distribution (see Fig. 4d).

The problem of selecting a baseline is not solved, and for any further experiments the “black image” baseline is going to be used.
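To make the differences between these baselines concrete, here is a rough sketch that builds each of them for a tiny grayscale image stored as nested lists. It rests on several assumptions that do not come from the cited papers: pixel values lie in $[0, 1]$, the noise parameter `sigma` is passed to `random.gauss` as a standard deviation, the blur uses a fixed 3×3 kernel as a stand-in for a general Gaussian filter, and all function names are made up for this example.

```python
import random
from typing import List

Image = List[List[float]]  # grayscale, pixel values assumed to lie in [0, 1]


def clip(value: float) -> float:
    return min(1.0, max(0.0, value))


def black_baseline(image: Image) -> Image:
    """Constant baseline: every pixel set to 0."""
    return [[0.0 for _ in row] for row in image]


def gaussian_baseline(image: Image, sigma: float, rng: random.Random) -> Image:
    """Original image plus zero-mean Gaussian noise, clipped to the valid range."""
    return [[clip(p + rng.gauss(0.0, sigma)) for p in row] for row in image]


def blur_baseline(image: Image) -> Image:
    """Blur with a 3x3 Gaussian-like kernel; border pixels are repeated."""
    kernel = [[1, 2, 1], [2, 4, 2], [1, 2, 1]]  # weights sum to 16
    h, w = len(image), len(image[0])
    blurred = []
    for r in range(h):
        row = []
        for c in range(w):
            acc = 0.0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr = min(h - 1, max(0, r + dr))
                    cc = min(w - 1, max(0, c + dc))
                    acc += kernel[dr + 1][dc + 1] * image[rr][cc]
            row.append(acc / 16.0)
        blurred.append(row)
    return blurred


def max_distance_baseline(image: Image) -> Image:
    """Per pixel, pick the valid value (0 or 1) farthest from the original."""
    return [[0.0 if p >= 0.5 else 1.0 for p in row] for row in image]


def uniform_baseline(image: Image, rng: random.Random) -> Image:
    """Pixels drawn uniformly at random; only the image shape is used."""
    return [[rng.random() for _ in row] for row in image]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
image = [[0.0, 0.2, 0.9],
         [0.5, 1.0, 0.4],
         [0.7, 0.1, 0.3]]

baselines = {
    "black": black_baseline(image),
    "gaussian": gaussian_baseline(image, sigma=0.1, rng=rng),
    "blur": blur_baseline(image),
    "max_distance": max_distance_baseline(image),
    "uniform": uniform_baseline(image, rng),
}
for name, value in baselines.items():
    print(name, value)
```

Note that the Gaussian, Blur and Maximum Distance baselines are all computed from the input image, while the black and Uniform baselines use only its shape.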

Further reading

I’ve decided to create a series of articles explaining the most important XAI methods currently used in practice. Here is the main article: XAI Methods - The Introduction

References:

1. M. Sundararajan, A. Taly, Q. Yan. Axiomatic attribution for deep networks. International Conference on Machine Learning, pages 3319–3328. PMLR, 2017.

2. D. Smilkov, N. Thorat, B. Kim, F. Viégas, M. Wattenberg. SmoothGrad: removing noise by adding noise. arXiv preprint arXiv:1706.03825, 2017.

3. R. C. Fong, A. Vedaldi. Interpretable explanations of black boxes by meaningful perturbation. Proceedings of the IEEE International Conference on Computer Vision, pages 3429–3437, 2017.

4. P. Sturmfels, S. Lundberg, S.-I. Lee. Visualizing the impact of feature attribution baselines. Distill, 5(1):e22, 2020.

5. A. Khosla, N. Jayadevaprakash, B. Yao, L. Fei-Fei. Stanford dogs dataset. https://www.kaggle.com/jessicali9530/stanford-dogs-dataset, 2019. Accessed: 2021-10-01.