What is the Guided GradCAM method?

Guided GradCAM is a method created by Selvaraju et al. [1] that combines Guided Backpropagation (GBP) with GradCAM, which the same authors also introduced. To explain the idea behind Guided GradCAM, we have to split it into its separate components.

Class Activation Mapping

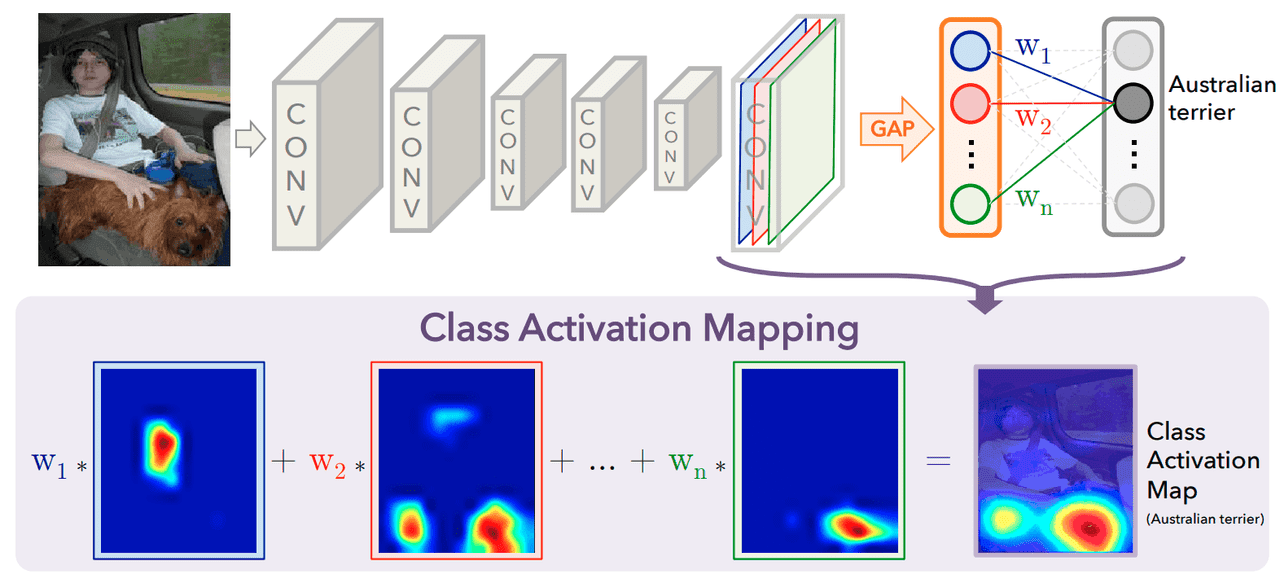

Class Activation Mapping (CAM) [2] is an approach to localizing the image regions responsible for a class prediction. It replaces the fully connected layers of the CNN with additional convolutional layers followed by global average pooling (GAP) [3].

As shown in Figure 1, the GAP operation is performed just before the Softmax layer. It computes the spatial average of the feature map of every unit in the last convolutional layer. A weighted sum of these spatial averages produces the final output, and the Softmax layer normalizes that output for classification. Class activation maps are computed similarly to the output, but the process is reversed: the weighted sum is taken at every spatial location instead of after averaging. Given a class $c$ as input, the activation map for that class is computed as:

$$M_c(x, y) = \sum_k w_k^c \, f_k(x, y)$$

where $w_k^c$ is the weight corresponding to unit $k$ and class $c$, and $f_k(x, y)$ is the activation of unit $k$ in the last convolutional layer at location $(x, y)$ (visualized in the bottom part of Fig. 1).
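To make the CAM formula concrete, here is a minimal NumPy sketch. The function name `class_activation_map`, the array shapes, and the random inputs are assumptions made for illustration; in a real model the activations and weights would come from the trained network.

```python
import numpy as np

def class_activation_map(features, weights, class_idx):
    """Compute a CAM for a single class.

    features: (K, H, W) activations f_k(x, y) of the last conv layer.
    weights:  (C, K) weights w_k^c of the layer that follows GAP.
    """
    # M_c(x, y) = sum_k w_k^c * f_k(x, y)
    return np.tensordot(weights[class_idx], features, axes=([0], [0]))

# Hypothetical toy data: K=4 units, 3x3 feature maps, C=2 classes.
rng = np.random.default_rng(0)
features = rng.random((4, 3, 3))
weights = rng.standard_normal((2, 4))

cam = class_activation_map(features, weights, class_idx=1)

# The class score (GAP first, then weighted sum) equals the spatial
# mean of the CAM, which is the "reversed" order described above.
score = weights[1] @ features.mean(axis=(1, 2))
```

The last line illustrates why the map is meaningful: averaging the CAM over all locations recovers the pre-softmax score of the class.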

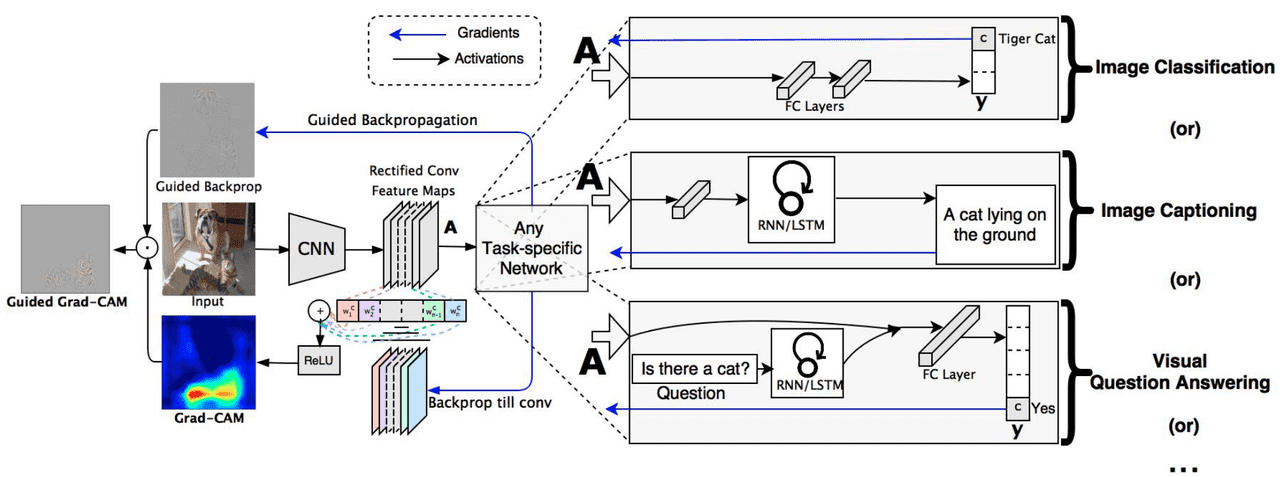

Selvaraju et al. argue that the CAM approach has a major drawback: it requires modifying the network by replacing all fully connected layers with convolutional layers. This makes CAM applicable only to certain tasks and reduces the performance of the network itself. They introduce a method that addresses these issues and call it GradCAM. The method is implementation agnostic (within CNNs) and can be applied without any modification to the network. As the name says, GradCAM uses gradients to generate CAMs. Unlike the original CAM paper, GradCAM lets us select the convolutional layer whose feature maps we compute the gradient against. The gradient is computed for a given score $y^c$ (where $c$ indicates a class) before the softmax layer. Then, using the feature maps $A^k$ from the selected layer, we calculate a neuron importance weight $\alpha_k^c$ for every feature map $k$ (similar to calculating a weight for every unit in CAM):

$$\alpha_k^c = \frac{1}{Z} \sum_i \sum_j \frac{\partial y^c}{\partial A_{ij}^k}$$

Here $\frac{1}{Z} \sum_i \sum_j$ is global average pooling over the width ($i$) and height ($j$), with $Z$ the number of spatial locations, and $\frac{\partial y^c}{\partial A_{ij}^k}$ is the gradient of the score $y^c$ with respect to the selected layer's feature map. With all $\alpha_k^c$ calculated, we can perform a linear combination of the feature maps $A^k$ and the neuron importance weights $\alpha_k^c$:

$$L^c_{\text{GradCAM}} = \mathrm{ReLU}\left(\sum_k \alpha_k^c A^k\right)$$

The ReLU is used to cut off any negative values. The intuition is that negative values more likely belong to other classes that are also present in the image. The resulting GradCAM map has the same size as the convolutional feature map used to compute the gradient, so its values have to be mapped (upsampled) onto the original image to visualize which regions were relevant in predicting class $c$.
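The GradCAM computation can be sketched in a few lines of NumPy, assuming the activations and the gradients of the class score have already been extracted from the network (for example with framework hooks). The function names `grad_cam` and `upsample_nearest` are hypothetical, and nearest-neighbour upsampling is used here only as a simplification; smoother interpolation is common in practice.

```python
import numpy as np

def grad_cam(activations, gradients):
    """GradCAM map from precomputed tensors.

    activations: (K, H, W) feature maps A^k of the selected layer.
    gradients:   (K, H, W) gradients of the class score w.r.t. A^k.
    """
    # alpha_k^c: global average pooling of the gradients over H and W.
    alpha = gradients.mean(axis=(1, 2))
    # Linear combination of the feature maps with weights alpha_k^c.
    weighted = np.tensordot(alpha, activations, axes=([0], [0]))
    # ReLU: keep only the positive evidence for the class.
    return np.maximum(weighted, 0.0)

def upsample_nearest(cam, factor):
    """Nearest-neighbour upsampling (a simplification for this sketch)."""
    return np.kron(cam, np.ones((factor, factor)))

# Hypothetical toy tensors: K=2 feature maps of size 2x2.
activations = np.array([[[1.0, 2.0], [3.0, 4.0]],
                        [[1.0, 1.0], [1.0, 1.0]]])
gradients = np.array([[[1.0, 1.0], [1.0, 1.0]],
                      [[-2.0, -2.0], [-2.0, -2.0]]])

cam = grad_cam(activations, gradients)      # [[0, 0], [1, 2]]
heatmap = upsample_nearest(cam, factor=2)   # 4x4 map for a 4x4 "image"
```

In this toy case the weights are 1 and -2, so the top row of the weighted sum is negative or zero and the ReLU clips it, leaving only the bottom row highlighted.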

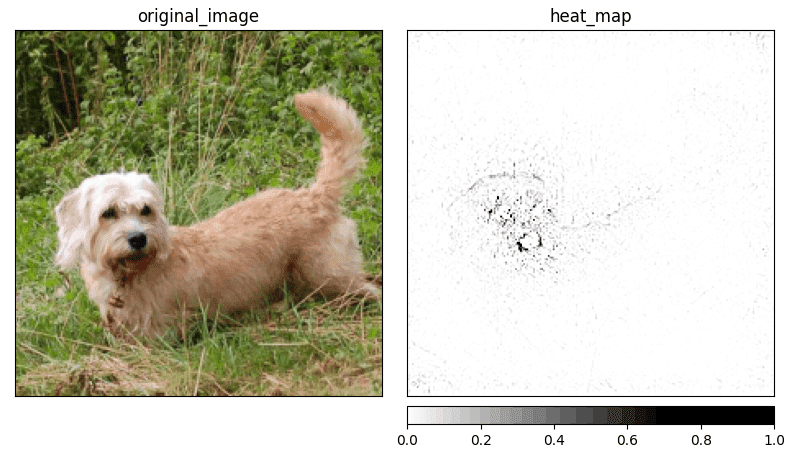

Guided GradCAM is a combination of GradCAM's map and the GBP attribution. To compute the Guided GradCAM value, we take the Hadamard product (element-wise multiplication) of the GBP attribution and the GradCAM map, upsampled to the input resolution (see Fig. 2). Combining GBP and GradCAM allows us to generate sharp, class-specific attributions, as presented in Figure 3.
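The combination step itself is a single broadcasted multiplication. The sketch below assumes a GBP attribution with one value per input channel and pixel, and a GradCAM map already upsampled to the input's spatial size; the function name `guided_grad_cam` and the toy values are hypothetical.

```python
import numpy as np

def guided_grad_cam(gbp_attribution, cam_upsampled):
    """Hadamard product of a GBP attribution and a GradCAM map.

    gbp_attribution: (C, H, W) attribution per input channel and pixel.
    cam_upsampled:   (H, W) GradCAM map at input resolution.
    """
    # Broadcast the single-channel map across all input channels.
    return gbp_attribution * cam_upsampled[np.newaxis, :, :]

# Hypothetical toy inputs: a 3-channel 2x2 "image".
gbp = np.arange(-4.0, 8.0).reshape(3, 2, 2)
cam = np.array([[0.0, 1.0],
                [0.5, 0.0]])

result = guided_grad_cam(gbp, cam)
```

Pixels where the GradCAM map is zero are removed from the GBP attribution entirely, which is what restricts the fine-grained GBP detail to the class-relevant region.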

Further reading

I’ve decided to create a series of articles explaining the most important XAI methods currently used in practice. Here is the main article: XAI Methods - The Introduction

References:

- [1] R. R. Selvaraju, M. Cogswell, A. Das, R. Vedantam, D. Parikh, D. Batra. Grad-CAM: Visual explanations from deep networks via gradient-based localization. Proceedings of the IEEE international conference on computer vision, pages 618–626, 2017.

- [2] B. Zhou, A. Khosla, A. Lapedriza, A. Oliva, A. Torralba. Learning deep features for discriminative localization. Proceedings of the IEEE conference on computer vision and pattern recognition, pages 2921–2929, 2016.

- [3] M. Lin, Q. Chen, S. Yan. Network in network. arXiv preprint arXiv:1312.4400, 2013.

- [4] A. Khosla, N. Jayadevaprakash, B. Yao, L. Fei-Fei. Stanford dogs dataset. https://www.kaggle.com/jessicali9530/stanford-dogs-dataset, 2019. Accessed: 2021-10-01.