What is Saliency, and why is the name confusing?

Saliency [1] is one of the first attribution methods designed to visualize the input attribution of a convolutional network. Because the word "saliency" is often used for the whole family of approaches that display input attribution as a saliency map, this method is also known as Vanilla Gradient.

The idea of the Saliency method starts from class visualization: finding an image $I$ that maximizes the score $S_c(I)$ for a given class $c$. It can be written formally as:

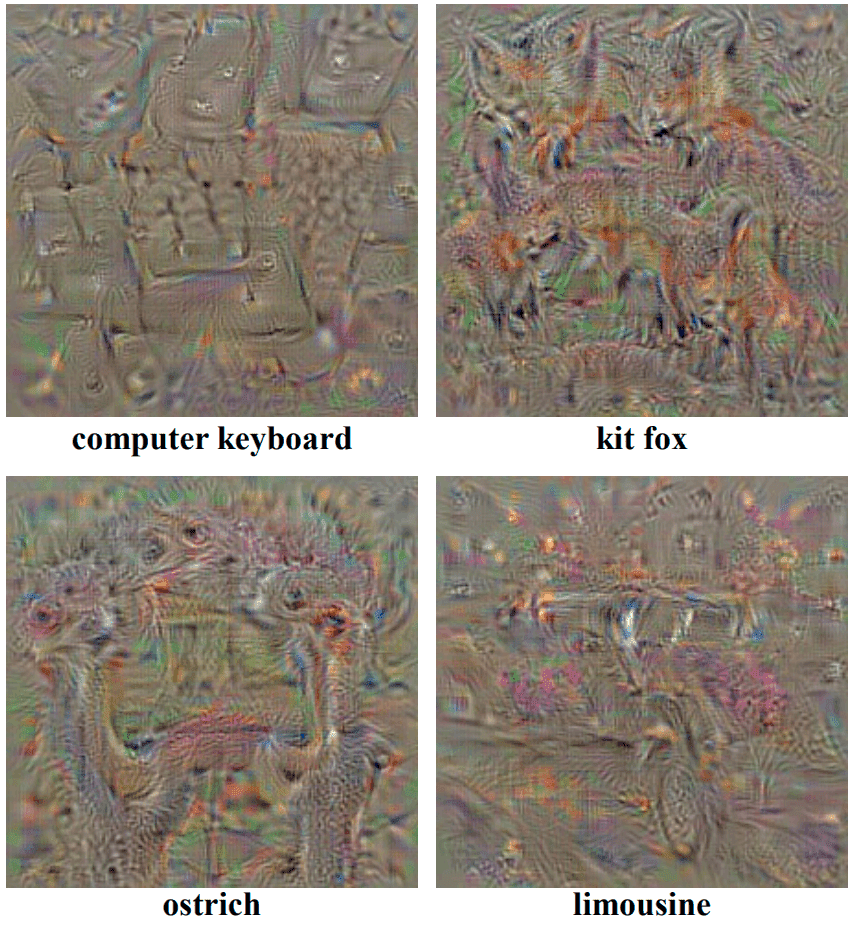

where $\lambda$ is a regularisation parameter. To find $I$, we can use back-propagation. Unlike in the standard learning process, we back-propagate with respect to the input image while the network weights stay fixed. This optimization allows us to produce images that visualize a particular class in our model (see Fig. 1).
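The optimization above can be sketched in a few lines. This is only a minimal illustration, assuming a toy linear scorer $S_c(I) = w_c \cdot I$ in place of a real convolutional network; the names `class_visualization` and `w_c`, the step size, and the number of steps are made up for this example.

```python
import numpy as np

def class_visualization(w_c, lam=0.5, lr=0.1, steps=200):
    """Gradient ascent on the input for a toy linear scorer S_c(I) = w_c . I.

    Maximizes S_c(I) - lam * ||I||_2^2, starting from a zero image.
    """
    image = np.zeros_like(w_c, dtype=float)
    for _ in range(steps):
        # d/dI [w_c . I - lam * ||I||^2] = w_c - 2 * lam * I
        grad = w_c - 2.0 * lam * image
        # Ascent step on the image; the model weights w_c are never updated.
        image += lr * grad
    return image

rng = np.random.default_rng(0)
w_c = rng.normal(size=(8, 8))  # hypothetical class weights, shaped like an 8x8 image
visualization = class_visualization(w_c)
```

For this toy scorer the optimum is known in closed form, $I^* = w_c / (2\lambda)$, which makes it easy to check that the loop converges. With a real network the gradient would come from back-propagation and no closed form exists.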

From class visualization to Saliency

This idea can be extended: with minor modifications, we can query for the spatial support of class $c$ in a given image $I_0$. To do this, we rank the pixels of $I_0$ by their importance for the score $S_c(I_0)$. The authors assume that $S_c(I)$ can be approximated by a linear function in the neighborhood of $I_0$:

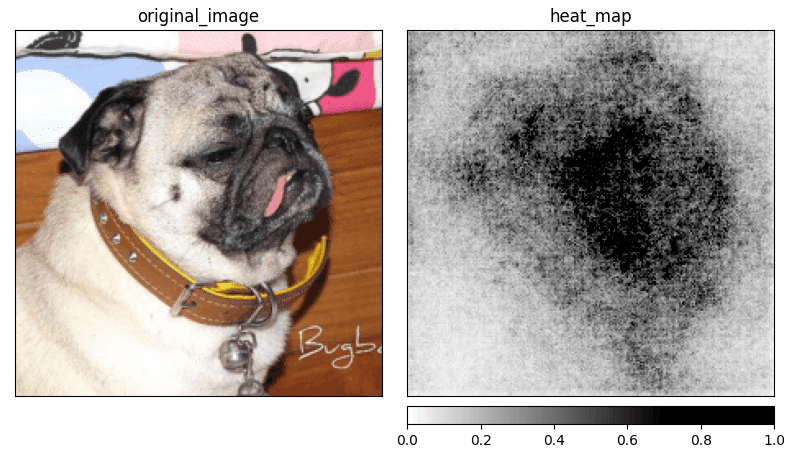

For an input image $I_0$ and a class $c$, we can compute a saliency map $M \in \mathbb{R}^{m \times n}$ (where $m$ and $n$ are the height and width of the input in pixels). All we have to do is compute the derivative $w = \left.\partial S_c / \partial I\right|_{I_0}$ and rearrange the elements of the returned vector.
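As a rough sketch of "compute the derivative and rearrange", the example below uses a tiny hand-written scorer, $S_c(I) = v \cdot \tanh(W \, \mathrm{vec}(I))$, so the gradient can be written out with the chain rule. The model, the names `class_score` and `vanilla_gradient`, and all weights are assumptions for illustration; with a real network the gradient would come from the framework's automatic differentiation.

```python
import numpy as np

def class_score(image, W, v):
    """Toy differentiable scorer: S_c(I) = v . tanh(W @ vec(I))."""
    return float(v @ np.tanh(W @ image.ravel()))

def vanilla_gradient(image, W, v):
    """Return |dS_c/dI| evaluated at `image`, rearranged to the image shape."""
    hidden = np.tanh(W @ image.ravel())
    # Chain rule: dS/dx = W^T (v * (1 - tanh^2(W x)))
    w = W.T @ (v * (1.0 - hidden ** 2))
    # w is a flat vector with one entry per pixel; reshape it into the map M.
    return np.abs(w).reshape(image.shape)

rng = np.random.default_rng(0)
m, n = 4, 5                      # image height and width
image = rng.normal(size=(m, n))  # stand-in for the input image I_0
W = rng.normal(size=(6, m * n))  # hypothetical hidden-layer weights
v = rng.normal(size=6)           # hypothetical output weights for class c
saliency_map = vanilla_gradient(image, W, v)
```

The result has the same height and width as the input, and each entry is the magnitude of the score's sensitivity to that pixel.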

The method handles the input differently depending on the number of channels in the image $I_0$. For grey-scale images (one color channel), we rearrange the absolute values of $w$ to match the shape of the image, $M_{ij} = |w_{h(i,j)}|$. If the number of channels is greater than one, we take the maximum absolute value across the channels of each pixel:

$$M_{ij} = \max_{ch} \left| w_{h(i,j,ch)} \right|$$

where $ch$ is a color channel of the pixel $(i,j)$ and $h(i,j,ch)$ is the index of the element of $w$ corresponding to that pixel and channel. With the obtained map, we can visualize pixel importance for the input image, as shown in Fig. 2.
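The multi-channel case can be illustrated with a short NumPy sketch. It assumes the gradient has already been computed and is stored channel-first, with shape `(channels, height, width)`; the function name `reduce_channels` and the numbers are made up for the example.

```python
import numpy as np

def reduce_channels(grad):
    """Collapse a (channels, height, width) gradient to a (height, width) map.

    Each pixel keeps the largest absolute gradient across its channels.
    """
    return np.abs(grad).max(axis=0)

# Hand-made gradient for a 1x2 RGB image (values are illustrative only).
grad = np.array([
    [[0.1, -0.7]],  # red channel
    [[-0.5, 0.2]],  # green channel
    [[0.3, 0.4]],   # blue channel
])
saliency_map = reduce_channels(grad)  # array([[0.5, 0.7]])
```

The first pixel takes its value from the green channel and the second from the red channel, so the sign of the gradient is discarded and only the strongest channel response survives.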

The original Saliency method produces a lot of noise, but it still gives us an idea of which parts of the input image are relevant when predicting a specific class. The noise becomes a problem mainly when the object in the image has many details and the model uses most of them to make a prediction.

Further reading

I’ve decided to create a series of articles explaining the most important XAI methods currently used in practice. Here is the main article: XAI Methods - The Introduction

References:

- [1] K. Simonyan, A. Vedaldi, A. Zisserman. Deep inside convolutional networks: Visualising image classification models and saliency maps, 2014.

- [2] A. Khosla, N. Jayadevaprakash, B. Yao, L. Fei-Fei. Stanford dogs dataset. https://www.kaggle.com/jessicali9530/stanford-dogs-dataset, 2019. Accessed: 2021-10-01.