What is Deconvolution?

The idea of Deconvolution [2] comes from the work of Zeiler et al. [1] on Deconvolutional Networks (deconvnets). Deconvnets are designed to work like convolutional networks, but in reverse (reversing the pooling component, the filter component, and so on), and they can be trained with an unsupervised approach. When deconvolution is used to explain a model, we do not train a deconvnet; we only use it to probe our CNN.

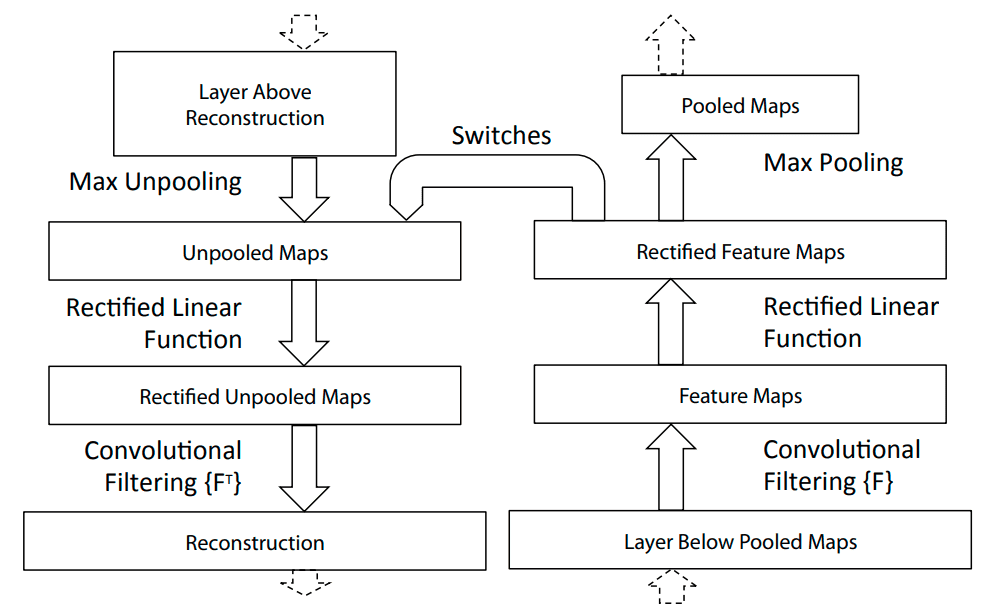

To reconstruct the activation on a specific layer, we attach deconvnet layers to the corresponding CNN layers (see Fig. 1). An image is then passed through the CNN, and the network computes its output. To examine the reconstruction for a given class, we set all activations except the one responsible for predicting that class to zero. We can then propagate this signal back through the deconvnet layers, passing the feature maps as inputs to the corresponding layers.
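The masking step described above can be sketched in a few lines of plain Python. This is only an illustration: the function name `keep_only`, the example scores, and the target index are all hypothetical, and a real model would apply the same idea to a tensor of output activations.

```python
def keep_only(activations, index):
    """Return a copy with every entry zeroed except the one at `index`."""
    return [a if i == index else 0.0 for i, a in enumerate(activations)]


# Hypothetical output-layer activations for four classes.
scores = [0.1, 2.3, -0.7, 1.2]
# Hypothetical index of the class we want to explain.
target = 1

masked = keep_only(scores, target)
print(masked)  # [0.0, 2.3, 0.0, 0.0]
```

The masked vector is what would be fed into the deconvnet, so that the reconstruction reflects only the chosen class.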

To calculate the reconstruction, each deconvnet layer has to be able to reverse the operation performed by its CNN counterpart. The authors designed specific components to compute these reverse operations:

Filtering

Filtering in the original CNN computes feature maps using learned filters. Reversing that operation requires the use of a transposed version of the same filters. Those transposed filters are then applied to the Rectified Unpooled Maps.
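To make the idea of a transposed filter concrete, here is a minimal one-dimensional sketch in plain Python. It assumes a single filter, stride 1, and no padding; the names `conv1d` and `conv1d_transpose` are illustrative and do not come from the original papers. Note that the transposed operation maps a feature map back to the input size but is not an exact inverse of the forward filtering.

```python
def conv1d(x, w):
    """Forward filtering: slide filter `w` over signal `x` (no padding, stride 1)."""
    n_out = len(x) - len(w) + 1
    return [sum(w[k] * x[i + k] for k in range(len(w))) for i in range(n_out)]


def conv1d_transpose(y, w):
    """Transposed filtering: scatter each value of `y` back through the same weights."""
    out = [0.0] * (len(y) + len(w) - 1)
    for i, y_i in enumerate(y):
        for k, w_k in enumerate(w):
            out[i + k] += w_k * y_i
    return out


x = [1.0, 2.0, 0.0, -1.0, 3.0]   # toy input signal
w = [1.0, 0.0, -1.0]             # toy edge-like filter

feature_map = conv1d(x, w)
reconstruction = conv1d_transpose(feature_map, w)

print(feature_map)     # [1.0, 3.0, -3.0]
print(reconstruction)  # [1.0, 3.0, -4.0, -3.0, 3.0]
```

The reconstruction has the same length as the input, which is what lets the signal be projected back toward input space layer by layer.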

Rectification

Rectification uses the same ReLU non-linearity [4] as the CNN to compute the Rectified Unpooled Maps. It simply rectifies the values and propagates only the non-negative ones to the filtering layer.
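As a small illustration, rectification of an unpooled map could look like the sketch below. The map is represented as a nested list, and the values are made up for the example.

```python
def relu(feature_map):
    """Replace every negative value in a 2D map with zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical unpooled map with mixed signs.
unpooled = [[-1.5, 0.0, 2.0],
            [0.5, -0.2, 0.0]]

rectified = relu(unpooled)
print(rectified)  # [[0.0, 0.0, 2.0], [0.5, 0.0, 0.0]]
```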

Unpooling

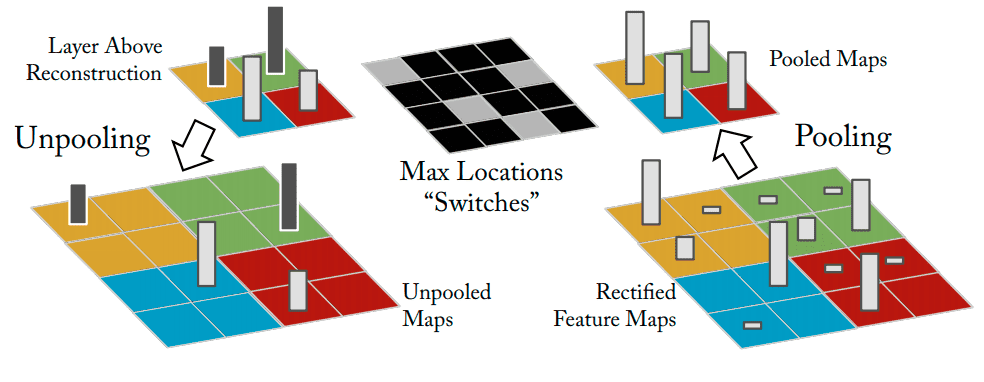

Unpooling corresponds to the pooling layer of the CNN (see Fig. 2). The original max-pooling operation is non-invertible, but this approach uses additional variables, called switch variables, which remember the location of the maximum within each pooling region. The unpooling layer uses these variables to place the reconstructed values at the same locations that were selected when pooling was computed.
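The role of the switch variables can be sketched in plain Python as follows. This is a simplified illustration, assuming non-overlapping square pooling regions and a feature map whose height and width are divisible by the pooling size; the function names are hypothetical.

```python
def max_pool_with_switches(fmap, size=2):
    """Max-pool a 2D map and record where each maximum came from (the switches)."""
    pooled, switches = [], []
    for r in range(0, len(fmap), size):
        pooled_row, switch_row = [], []
        for c in range(0, len(fmap[0]), size):
            region = [(fmap[i][j], (i, j))
                      for i in range(r, r + size)
                      for j in range(c, c + size)]
            value, location = max(region, key=lambda item: item[0])
            pooled_row.append(value)
            switch_row.append(location)
        pooled.append(pooled_row)
        switches.append(switch_row)
    return pooled, switches


def unpool(pooled, switches, height, width):
    """Put each pooled value back at its recorded location; everything else is zero."""
    out = [[0.0] * width for _ in range(height)]
    for pooled_row, switch_row in zip(pooled, switches):
        for value, (i, j) in zip(pooled_row, switch_row):
            out[i][j] = value
    return out


fmap = [[1.0, 3.0, 2.0, 0.0],
        [4.0, 2.0, 1.0, 5.0],
        [0.0, 1.0, 7.0, 2.0],
        [6.0, 2.0, 3.0, 1.0]]

pooled, switches = max_pool_with_switches(fmap)
unpooled = unpool(pooled, switches, height=4, width=4)

print(pooled)    # [[4.0, 5.0], [6.0, 7.0]]
print(switches)  # [[(1, 0), (1, 3)], [(3, 0), (2, 2)]]
print(unpooled)  # [[0.0, 0.0, 0.0, 0.0], [4.0, 0.0, 0.0, 5.0], [0.0, 0.0, 7.0, 0.0], [6.0, 0.0, 0.0, 0.0]]
```

Only the maxima survive the round trip, which is why the result is an approximate reconstruction rather than a true inverse of pooling.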

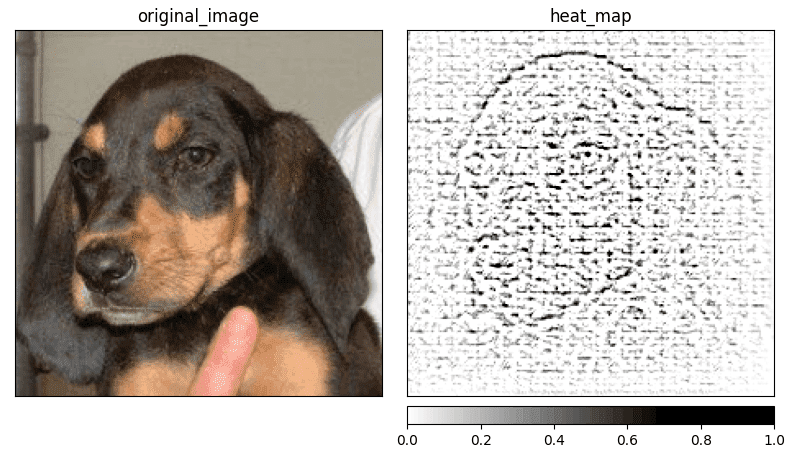

Propagation through the whole deconvnet gives us a representation of the features from the first layer of the original CNN (the last deconvnet layer corresponds to the first CNN layer). As a result, the saliency map inherits some biases of the first convolutional layer, and the representation looks like a localized edge detector (see Fig. 3). The method usually works better when feature importance is clearly concentrated in some regions rather than spread evenly across the whole image.

Further reading

I’ve decided to create a series of articles explaining the most important XAI methods currently used in practice. Here is the main article: XAI Methods - The Introduction

References:

- M. D. Zeiler, G. W. Taylor, R. Fergus. Adaptive deconvolutional networks for mid and high level feature learning, 2011.

- M. D. Zeiler, R. Fergus. Visualizing and Understanding Convolutional Networks, 2013.

- A. Khosla, N. Jayadevaprakash, B. Yao, L. Fei-Fei. Stanford dogs dataset. https://www.kaggle.com/jessicali9530/stanford-dogs-dataset, 2019. Accessed: 2021-10-01.

- R. Hahnloser, R. Sarpeshkar, M. Mahowald, R. J. Douglas, S. Seung. Digital selection and analogue amplification coexist in a cortex-inspired silicon circuit, 2000.