Explainable Artificial Intelligence (XAI) is getting more and more popular every year, and every year we also get more methods that aim to explain how our models work. The problem with most of those approaches is how to compare them.

We have two types of evaluation methods:

- Quantitative - based on some numeric value

- Qualitative - based on opinions/polls

Let me show you where the problem is:

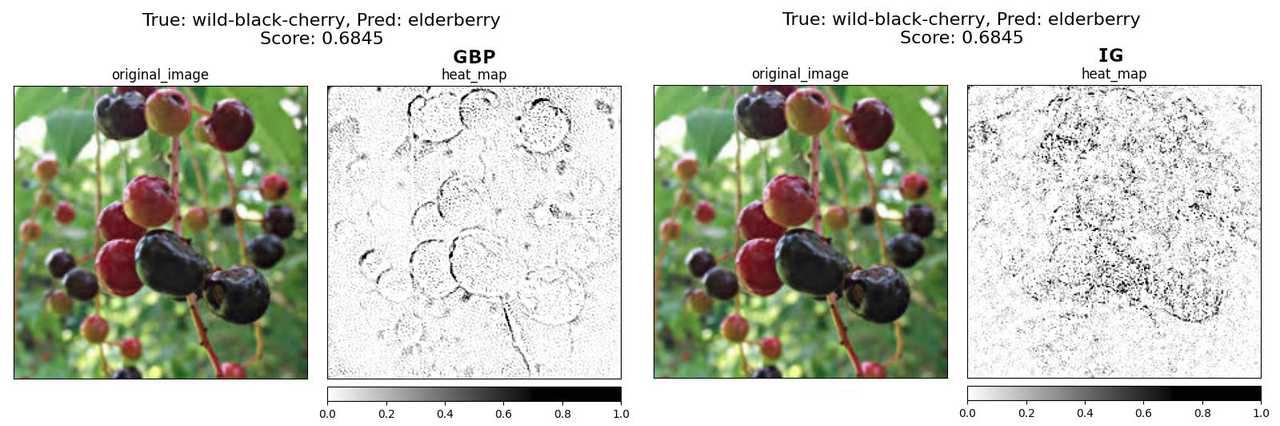

Here we have two explanations of the same prediction (made by a ResNet18 model), produced by two methods: Guided Backpropagation (GBP) and Integrated Gradients (IG). As you can see, the predicted class is incorrect, and the two explanations of why this should be an elderberry are completely different. As someone who knows nothing about plants, I would say that the GBP explanation is better, and it probably is. We could ask more people, gather all the answers, and decide which one is better. Before doing that, let us jump to a different example.

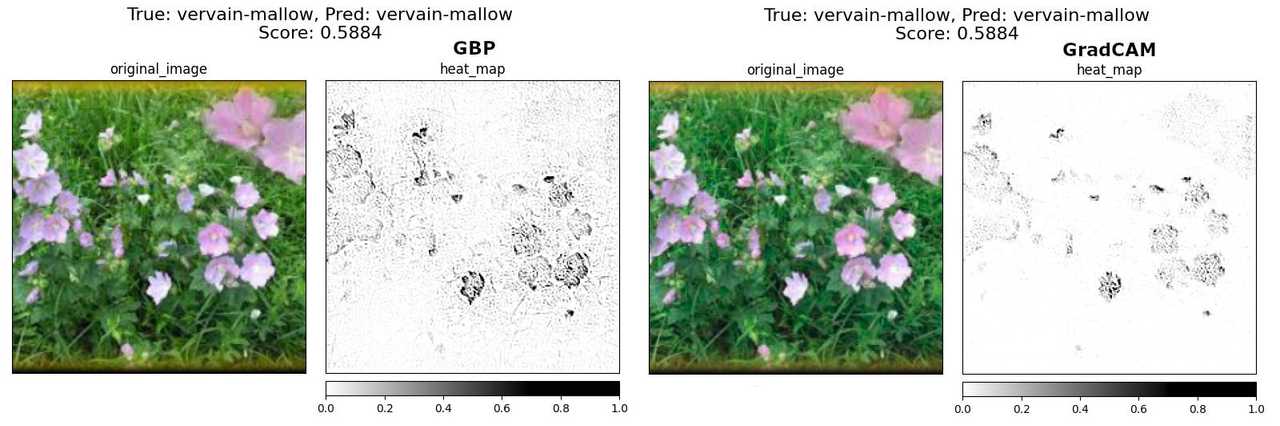

In this case, we’re looking at a different method called GradCAM. This example is not as obvious as the previous one. GradCAM’s version has less noise (more focus on the flowers), but without an expert we cannot be sure which one is better. We could still poll people and get some answers, but would those answers be valid? Sure, we could test 100 or even 1000 images and get a good idea of which method is better, but that won’t scale when our dataset has 10k images and we need to compare 5 different methods using 5 different models.

This is the problem with the qualitative approach. We can compare a handful of examples, but that doesn’t give us a meaningful metric we could reuse when we want to add another method to the comparison. This is where quantitative methods come in. The idea of a quantitative method is to have some kind of metric that is replicable and allows us to compare numeric values. In the paper On the (In)fidelity and Sensitivity for Explanations, Chih-Kuan Yeh et al. present two measures (called infidelity and sensitivity) that are intended to be an objective evaluation of this kind of explanation (saliency maps).

Sensitivity

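Let’s start with the second one because it’s easier to explain. As the name says, this measure tells us how “sensitive” the explanation method is. The paper defines the maximum sensitivity as:

$$\text{SENS}_{\text{MAX}}(\Phi, f, \mathbf{x}, r) = \max_{\|\mathbf{y} - \mathbf{x}\| \le r} \|\Phi(f, \mathbf{y}) - \Phi(f, \mathbf{x})\|$$

Where:

- $\Phi$ is an explanation function

- $f$ is a black-box model

- $\mathbf{x}$ is an input

- $\mathbf{y}$ is a perturbed input within distance $r$ of $\mathbf{x}$

- $r$ is called the input neighborhood radius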

Sensitivity is defined as the change in the explanation under a small perturbation of the input. To calculate the SENS value, we make those small perturbations and check how our attributions have changed with respect to the original attribution for the unchanged input.
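The definition can be sketched in plain Python as follows. The sketch assumes that inputs and attributions are flattened 3x3 grids stored as lists, and it uses the Euclidean (Frobenius) norm. The paper takes the maximum over the whole neighborhood. Here that maximum is approximated over a finite list of given perturbations. `toy_explain` is a hypothetical explanation function (the gradient of a sum of squares), not one of the XAI methods above.

```python
import math


def l2_norm(values):
    """Euclidean norm of a flat list (equal to the Frobenius norm of the 3x3 grid)."""
    return math.sqrt(sum(v * v for v in values))


def max_sensitivity(explain, x, perturbations):
    """Approximate SENS_MAX as the largest attribution change over the given perturbations.

    explain: function mapping a flat input list to a flat attribution list.
    x: the original (unperturbed) input.
    perturbations: list of small noise vectors, each with the same length as x.
    """
    base = explain(x)
    worst = 0.0
    for noise in perturbations:
        y = [xi + ni for xi, ni in zip(x, noise)]          # perturbed input
        diff = [a - b for a, b in zip(explain(y), base)]    # change in attribution
        worst = max(worst, l2_norm(diff))
    return worst


def toy_explain(x):
    """Hypothetical explanation: the gradient of f(x) = sum(x_i ** 2), i.e. 2 * x."""
    return [2.0 * xi for xi in x]


x = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]   # flattened 3x3 grayscale image
p1 = [0.0] * 9
p1[3] = -0.1                                          # subtract 0.1 at one position
p2 = [0.0] * 9
p2[0] = 0.05                                          # add 0.05 at another position
print(max_sensitivity(toy_explain, x, [p1, p2]))      # about 0.2
```

The function keeps the worst case rather than an average because the paper's measure is a maximum over the neighborhood.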

The original paper also refers to those “small perturbations” as “insignificant perturbations”, and that wording is important. When measuring infidelity we also perform perturbations, but those are “significant perturbations”. It’s good to keep that difference in mind.

You might ask, “What’s with that radius?” (the r parameter). It is basically the lower and upper bound of the uniform distribution $\mathcal{U}(-r, r)$ that the perturbations are sampled from. Now that we know the parameters, here are some examples:

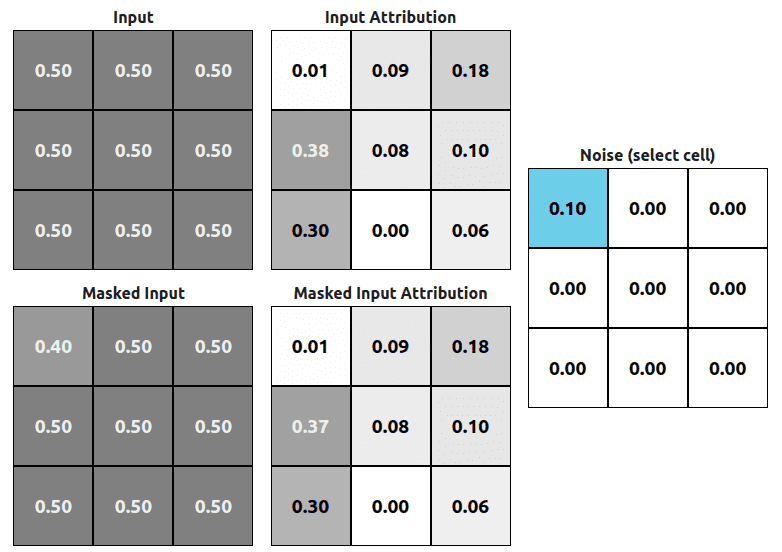

Fig. 3 was made with the help of a simple model that operates on 3x3x1 (grayscale) images. We subtract 0.1 of noise from the original input and calculate attributions based on the new masked input. We can see that the attribution changes (position (2,1) on the Masked Input Attribution grid). This example produces a sensitivity value of ~0.01. It is slightly more than that because other attributions also changed, but those changes are very small. In some cases, the sensitivity value can also be 0.

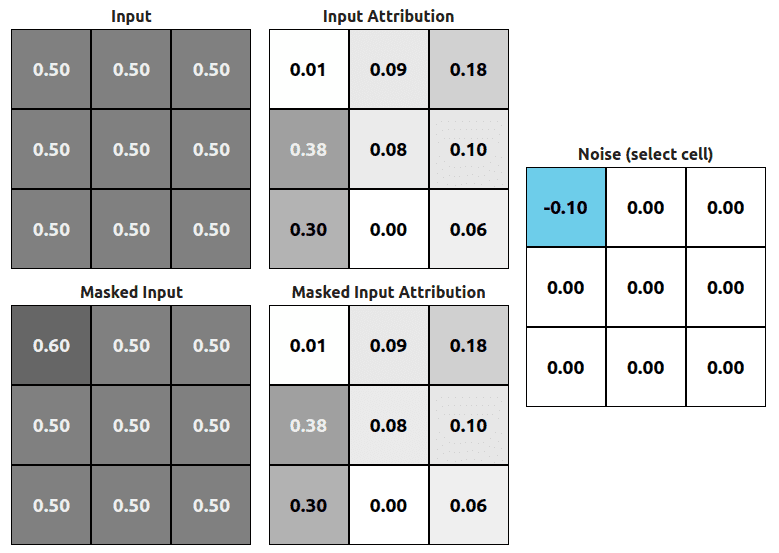

This example is fake (check it in the application below), but I made it just to show you when the sensitivity can be equal to 0. In this case (Fig. 4) we’ve also applied a perturbation (-0.1 at position (1,1)), but the attribution of the new masked input stays the same. This is the only case in which the sensitivity would be 0. In practice, the sensitivity value is not calculated from one perturbation but from 10 or more.
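Estimating sensitivity from several random perturbations can be sketched in plain Python. The sketch assumes that each of the (by default 10) perturbations is drawn element-wise from U(-r, r). Element-wise sampling keeps every pixel within r of the original, which is one way to approximate the neighborhood in the definition. It is not necessarily how the paper or any particular library samples.

Both explanation functions are hypothetical:

- The gradient of a linear model is the same for every input, so its sensitivity is exactly 0. This matches the situation described for Fig. 4.
- The gradient of a sum of squares follows every change in the input, so its sensitivity is positive.

```python
import math
import random


def estimate_max_sensitivity(explain, x, r, n_samples=10, seed=0):
    """Approximate SENS_MAX using n_samples perturbations drawn element-wise from U(-r, r)."""
    rng = random.Random(seed)
    base = explain(x)
    worst = 0.0
    for _ in range(n_samples):
        y = [xi + rng.uniform(-r, r) for xi in x]           # one perturbed input
        diff = [a - b for a, b in zip(explain(y), base)]    # change in attribution
        worst = max(worst, math.sqrt(sum(d * d for d in diff)))
    return worst


# Hypothetical weights of a linear model f(x) = W . x on a flattened 3x3 image.
W = [0.3, -0.2, 0.1, 0.0, 0.5, -0.4, 0.2, 0.1, -0.1]


def gradient_of_linear(x):
    """Gradient of W . x: always W, so it never changes with the input."""
    return list(W)


def gradient_of_squares(x):
    """Gradient of sum(x_i ** 2): 2 * x, so it changes with every perturbation."""
    return [2.0 * xi for xi in x]


x = [0.5] * 9
print(estimate_max_sensitivity(gradient_of_linear, x, r=0.1))    # 0.0
print(estimate_max_sensitivity(gradient_of_squares, x, r=0.1))   # small but positive
```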

Infidelity

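Infidelity is a little more complicated than sensitivity. The result depends on more than just the attribution: it also uses the model’s score and the noise itself. The equation for infidelity looks like:

$$\text{INFD}(\Phi, f, \mathbf{x}) = \mathbb{E}_{\mathbf{I} \sim \mu_{\mathbf{I}}}\left[\left(\mathbf{I}^T \Phi(f, \mathbf{x}) - \big(f(\mathbf{x}) - f(\mathbf{x} - \mathbf{I})\big)\right)^2\right]$$

Where:

- $\Phi$ is an explanation function

- $f$ is a black-box model

- $\mathbf{x}$ is an input

- $\mathbf{I}$ is a significant perturbation, drawn from the distribution $\mu_{\mathbf{I}}$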

This time we’re dealing with a significant perturbation. The paper discusses this perturbation at length, but in the end we’re left with 2 main options:

- Noisy Baseline - just use a Gaussian random vector

- Square Removal - remove random subsets of pixels
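Both options can be sketched in plain Python on a flattened 3x3 image. The following choices are illustrative assumptions, not settings from the paper:

- the standard deviation `sigma`;
- the patch size `k`;
- reading "removal" as setting pixels to 0, so that subtracting the perturbation from the input zeroes a random square.

With a real ML library you would draw the Gaussian tensor with the library's own function, for example `torch.randn_like` (scaled by `sigma`) in PyTorch.

```python
import random


def noisy_baseline_perturbation(x, sigma, rng):
    """Noisy Baseline: a Gaussian random vector with the same shape as x."""
    return [rng.gauss(0.0, sigma) for _ in x]


def square_removal_perturbation(x, width, height, k, rng):
    """Square Removal (one possible reading): pick a random k x k patch and make the
    perturbation equal to x inside it and 0 elsewhere, so x - perturbation zeroes the patch."""
    row = rng.randrange(height - k + 1)      # top-left corner of the patch
    col = rng.randrange(width - k + 1)
    perturbation = [0.0] * len(x)
    for i in range(row, row + k):
        for j in range(col, col + k):
            idx = i * width + j
            perturbation[idx] = x[idx]
    return perturbation


rng = random.Random(0)
x = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9]     # flattened 3x3 image
noise = noisy_baseline_perturbation(x, sigma=0.1, rng=rng)
removal = square_removal_perturbation(x, width=3, height=3, k=2, rng=rng)
perturbed = [xi - pi for xi, pi in zip(x, removal)]   # input after the perturbation
print(perturbed)  # four entries (one 2x2 patch) are now 0.0
```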

The first option is easier from an implementation and usage perspective. We just need to generate a vector (strictly speaking, a tensor) with the same shape as our input, using a Gaussian distribution. Most ML libraries already have this built in. OK, but what exactly does the calculation look like?

We can divide that infidelity equation into 2 or even 3 parts:

Part 1: $\mathbf{I}^T \Phi(f, \mathbf{x})$

We need to calculate the attribution using our original input and multiply it by the transposed noise vector, which gives the inner product of the noise and the attribution. This might look simple (and it is), but there is one important thing to notice. Each attribution value is multiplied by the noise value at the same position. So wherever the noise is 0, the corresponding attribution value is cleared (multiplication by 0) and does not contribute to the result.

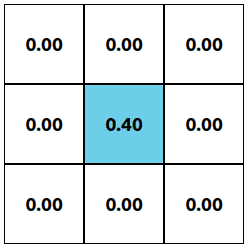

With noise like in Fig. 5, only the attribution value at position (2,2) is used for the final score calculation.
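The first part (noise times attribution) can be sketched as an inner product over flattened 3x3 grids. The attribution values are made up for illustration. The noise is non-zero at a single position, like the noise described for Fig. 5.

```python
def explanation_term(noise, attribution):
    """First part of infidelity: I^T Phi(f, x), the inner product of flattened noise and attribution."""
    return sum(n * a for n, a in zip(noise, attribution))


attribution = [0.05, 0.10, 0.02, 0.30, 0.40, 0.01, 0.07, 0.20, 0.15]
noise = [0.0] * 9
noise[4] = 0.5            # only the center of the 3x3 grid is perturbed

print(explanation_term(noise, attribution))   # 0.2, i.e. 0.5 * 0.40

# Changing an attribution where the noise is 0 has no effect on the result.
attribution[0] = 99.0
print(explanation_term(noise, attribution))   # still 0.2
```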

Part 2: $f(\mathbf{x}) - f(\mathbf{x} - \mathbf{I})$

This part is even simpler. We take the black-box model’s output for the original input and subtract from it the output for the perturbed input.
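The second part can be sketched with a hypothetical black-box score: a sigmoid over a weighted sum of pixels, with made-up weights. This is not the model used in the figures.

```python
import math


def toy_model(x):
    """Hypothetical black-box score: sigmoid of a weighted sum of the 9 pixels."""
    w = [0.3, -0.2, 0.1, 0.0, 0.5, -0.4, 0.2, 0.1, -0.1]
    z = sum(wi * xi for wi, xi in zip(w, x))
    return 1.0 / (1.0 + math.exp(-z))


def score_drop(f, x, noise):
    """Second part of infidelity: f(x) - f(x - I), the score change caused by the perturbation."""
    perturbed = [xi - ni for xi, ni in zip(x, noise)]
    return f(x) - f(perturbed)


x = [0.5] * 9
noise = [0.0] * 9
noise[4] = 0.5                       # perturb only the center pixel
print(score_drop(toy_model, x, noise))   # about 0.062
```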

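Part 3: $\left(\mathbf{I}^T \Phi(f, \mathbf{x}) - \big(f(\mathbf{x}) - f(\mathbf{x} - \mathbf{I})\big)\right)^2$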

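This is not exactly a separate part. We don’t want any negative numbers, so we square the difference between the first and the second part. Finally, the squared differences are averaged over many sampled perturbations, which approximates the expectation in the equation.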
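The three parts can be put together in a minimal Monte Carlo sketch in plain Python. It assumes flattened inputs, Gaussian ("noisy baseline") perturbations with an arbitrary `sigma`, and a hypothetical linear model. For a linear model, the plain gradient explains the score change exactly, so its infidelity is essentially 0. An explanation that attributes nothing is penalized.

```python
import random


def infidelity(f, explain, x, sigma=0.1, n_samples=100, seed=0):
    """Monte Carlo estimate of INFD(Phi, f, x) with Gaussian perturbations.

    For each sampled perturbation I it computes
      first part:  I^T Phi(f, x)
      second part: f(x) - f(x - I)
      third part:  the squared difference of the two,
    and returns the average over all samples (the expectation in the equation).
    """
    rng = random.Random(seed)
    attribution = explain(x)
    fx = f(x)
    total = 0.0
    for _ in range(n_samples):
        noise = [rng.gauss(0.0, sigma) for _ in x]
        part1 = sum(n * a for n, a in zip(noise, attribution))
        part2 = fx - f([xi - ni for xi, ni in zip(x, noise)])
        total += (part1 - part2) ** 2
    return total / n_samples


# Hypothetical linear model f(x) = W . x and two candidate explanations.
W = [0.3, -0.2, 0.1, 0.0, 0.5, -0.4, 0.2, 0.1, -0.1]


def linear_model(x):
    return sum(wi * xi for wi, xi in zip(W, x))


def gradient_explanation(x):
    return list(W)              # exact for a linear model


def blank_explanation(x):
    return [0.0] * len(x)       # attributes nothing


x = [0.5] * 9
print(infidelity(linear_model, gradient_explanation, x))  # close to 0 (floating-point error only)
print(infidelity(linear_model, blank_explanation, x))     # estimate of sigma**2 * sum(W_i**2) = 0.0061
```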

Demo

Now that you know what the calculation looks like, I’ve prepared a simple showcase application where you can play with different noise values and check how they affect infidelity and/or sensitivity. Remember that the noise values in this example are very large; usually even a significant perturbation is a lot smaller than that.

This application uses a model that takes a 3x3x1 input image and predicts which of two classes it belongs to. It is just a simple model, and you shouldn’t worry about its usefulness (it has none). I created it only to show the effect of the selected noise on infidelity and sensitivity; it would be awkward to show the same effect on a larger grid.

Conclusions

I’ve presented two measures that could be used to quantitatively measure and compare different XAI methods. I’m currently in the process of writing a paper discussing how useful these measures are and whether we should use them to compare methods with each other.

If you’re interested in using these measures, you can try the Captum library and its implementation of them:

References:

- Axiomatic Attribution for Deep Networks, Mukund Sundararajan et al. 2017, arXiv:1703.01365

- Striving for Simplicity: The All Convolutional Net, Jost Tobias Springenberg et al. 2015, arXiv:1412.6806

- On the (In)fidelity and Sensitivity for Explanations, Chih-Kuan Yeh et al. 2019, arXiv:1901.09392

- Grad-CAM: Visual Explanations from Deep Networks via Gradient-based Localization, R. R. Selvaraju et al. 2016, arXiv:1610.02391