What is the Noise Tunnel?

Noise Tunnel [1] is not an attribution method itself but a technique that improves the accuracy of attribution methods. It bundles three variants, SmoothGrad [2], SmoothGrad-Square (an unpublished variant of SmoothGrad), and VarGrad [3], and works with most attribution methods.

Noise Tunnel addresses a problem described in the articles XAI Methods - Guided Backpropagation and XAI Methods - Integrated Gradients, where we discussed how the ReLU activation function and gradients can produce noisy, often irrelevant attributions. Because the partial derivative of the model's class score with respect to a pixel's value fluctuates sharply, Smilkov et al. [2] proposed adding Gaussian noise to the input and averaging the attributions of the sampled inputs to reduce the problem.

Improve methods with Noise Tunnel

SmoothGrad (eq. 1) calculates the attribution using any available attribution method, feeding that method copies of the input perturbed with Gaussian noise. It then takes the mean over all samples, which reduces the weight of attributions that appear only occasionally. The idea is that when noise is added to the input image, important attributions remain visible in most samples, while noisy attributions change from sample to sample.

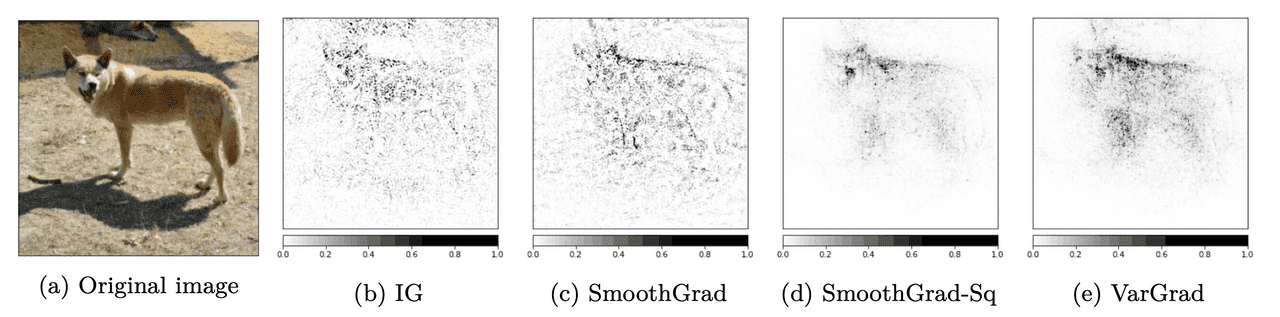

Another version of Noise Tunnel is SmoothGrad-Square. It changes only the way the mean is calculated, using the mean of squared attributions instead of the mean of the attributions themselves (eq. 2). This method usually gives less noisy results (compare Fig. 1c and Fig. 1d) but often removes less important features that are still valid.

The third version of Noise Tunnel uses VarGrad (see Fig. 1e), a variance-based counterpart of SmoothGrad. It can be defined as Eq. 3, which computes the variance of the noisy attributions around the SmoothGrad value.
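Written out, the three variants described above take roughly the following form. This is a sketch under assumed notation, following Smilkov et al. [2]: $M_c$ is the base attribution for class $c$, $n$ is the number of noisy samples, and $\sigma$ is the standard deviation of the Gaussian noise. None of these symbols are defined in the text itself.

```latex
\begin{align}
\hat{M}_c(x) &= \frac{1}{n}\sum_{i=1}^{n} M_c\big(x + g_i\big), \qquad g_i \sim \mathcal{N}(0, \sigma^2) \tag{1} \\
\hat{M}^{\mathrm{sq}}_c(x) &= \frac{1}{n}\sum_{i=1}^{n} M_c\big(x + g_i\big)^2 \tag{2} \\
\hat{M}^{\mathrm{var}}_c(x) &= \frac{1}{n}\sum_{i=1}^{n} M_c\big(x + g_i\big)^2 - \hat{M}_c(x)^2 \tag{3}
\end{align}
```

Eq. 3 is the per-pixel variance of the noisy attributions, so it equals the SmoothGrad-Square value minus the squared SmoothGrad value.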

When comparing all the methods used in Noise Tunnel, we can see major differences from the original attribution (see Fig. 1). SmoothGrad (Fig. 1c) seems to detect more edges of the input image than pure IG attribution (Fig. 1b), which can be interpreted as detecting the decision boundary. SmoothGrad-Square (Fig. 1d) and VarGrad (Fig. 1e) remove a large amount of noise but usually also remove some of the important features visible in the SmoothGrad attribution (look at the tail of the dingo).
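To make the difference between the three variants concrete, here is a minimal NumPy sketch. It is not Captum's implementation: `noise_tunnel`, `toy_attribution`, and all parameter names are hypothetical, and the toy attribution (the gradient of a sum of ReLUs) only stands in for a real method such as Integrated Gradients.

```python
import numpy as np


def toy_attribution(x, w=np.array([1.0, -2.0, 0.5, 0.0])):
    """Hypothetical stand-in for an attribution method.

    Returns the gradient of sum(relu(w * x)) with respect to x,
    which jumps between 0 and w when the sign of w * x flips.
    """
    return w * ((w * x) > 0)


def noise_tunnel(attribute, x, n_samples=5, stdev=0.1, kind="smoothgrad", seed=0):
    """Aggregate attributions computed on noisy copies of x (illustrative only)."""
    rng = np.random.default_rng(seed)
    # One attribution per noisy input, stacked along axis 0.
    attrs = np.stack([
        attribute(x + rng.normal(0.0, stdev, size=x.shape))
        for _ in range(n_samples)
    ])
    if kind == "smoothgrad":
        return attrs.mean(axis=0)          # mean of attributions
    if kind == "smoothgrad_sq":
        return (attrs ** 2).mean(axis=0)   # mean of squared attributions
    if kind == "vargrad":
        return attrs.var(axis=0)           # variance of attributions
    raise ValueError(f"unknown kind: {kind}")


x = np.array([0.05, -0.02, 0.3, 1.0])
smooth = noise_tunnel(toy_attribution, x, n_samples=50, kind="smoothgrad")
squared = noise_tunnel(toy_attribution, x, n_samples=50, kind="smoothgrad_sq")
variance = noise_tunnel(toy_attribution, x, n_samples=50, kind="vargrad")
```

Because all three calls use the same seed, they see the same noisy inputs, so `variance` equals `squared - smooth ** 2` up to floating-point error. Only the final aggregation step differs between the variants.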

Drawbacks

Although the Noise Tunnel method improves the accuracy of XAI methods, it adds a large amount of computational overhead. Every sample generated by the method requires rerunning the whole XAI method for that sample. The cost therefore grows linearly with the number of samples, and for the method to be effective you should use at least 5 generated noise samples (5 times the computation of the original XAI method alone). This might be problematic on slower machines or if the implementation stacks all samples in memory at once (graphics cards can run out of memory).
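One common way to limit the memory problem is to process the noisy samples in small chunks and keep only a running sum. The sketch below illustrates this for the SmoothGrad mean. The names `smoothgrad_chunked` and `CountingAttribution` are hypothetical, and the attribution is a toy (the gradient of `sum(x ** 2)`) that also counts how many samples it has processed. Captum's `NoiseTunnel.attribute` offers a comparable option through its `nt_samples_batch_size` argument.

```python
import numpy as np


class CountingAttribution:
    """Hypothetical batched attribution that records how much work it does."""

    def __init__(self):
        self.samples_seen = 0   # total noisy samples processed
        self.max_batch = 0      # largest batch held in memory at once

    def __call__(self, batch):
        self.samples_seen += batch.shape[0]
        self.max_batch = max(self.max_batch, batch.shape[0])
        return 2.0 * batch      # gradient of sum(x ** 2) for each sample


def smoothgrad_chunked(attribute_batch, x, n_samples=10, stdev=0.1,
                       chunk_size=2, seed=0):
    """SmoothGrad mean computed chunk by chunk (illustrative only)."""
    rng = np.random.default_rng(seed)
    running_sum = np.zeros_like(x, dtype=float)
    done = 0
    while done < n_samples:
        k = min(chunk_size, n_samples - done)
        # Only k noisy copies exist in memory at any time.
        noisy = x[None, ...] + rng.normal(0.0, stdev, size=(k,) + x.shape)
        running_sum += attribute_batch(noisy).sum(axis=0)
        done += k
    return running_sum / n_samples


x = np.linspace(-1.0, 1.0, 6)
counter = CountingAttribution()
result = smoothgrad_chunked(counter, x, n_samples=10, chunk_size=2)
```

The total work is unchanged (`counter.samples_seen` is still `n_samples`), but peak memory is bounded by `chunk_size` rather than by the number of samples.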

Further reading

I’ve decided to create a series of articles explaining the most important XAI methods currently used in practice. Here is the main article: XAI Methods - The Introduction

References:

[1] N. Kokhlikyan, V. Miglani, M. Martin, E. Wang, B. Alsallakh, J. Reynolds, A. Melnikov, N. Kliushkina, C. Araya, S. Yan, et al. Captum: A unified and generic model interpretability library for PyTorch. arXiv preprint arXiv:2009.07896, 2020.

[2] D. Smilkov, N. Thorat, B. Kim, F. Viégas, M. Wattenberg. SmoothGrad: removing noise by adding noise. arXiv preprint arXiv:1706.03825, 2017.

[3] J. Adebayo, J. Gilmer, M. Muelly, I. Goodfellow, M. Hardt, B. Kim. Sanity checks for saliency maps. arXiv preprint arXiv:1810.03292, 2018.

[4] A. Khosla, N. Jayadevaprakash, B. Yao, L. Fei-Fei. Stanford dogs dataset. https://www.kaggle.com/jessicali9530/stanford-dogs-dataset, 2019. Accessed: 2021-10-01.