Note! This is just a high-level overview of the ELM evolution. It doesn’t include all possible versions and tweaks made to ELMs through the years.

What is ELM?

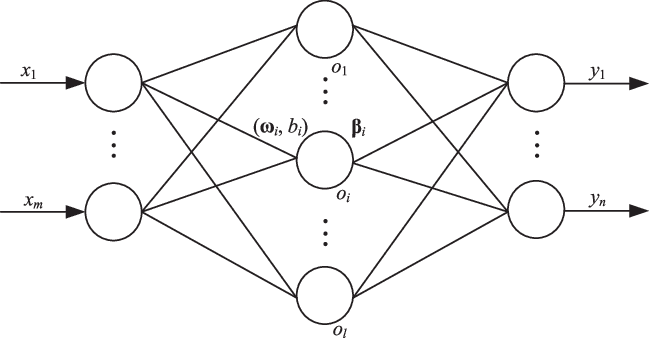

ELMs (Extreme Learning Machines) are feedforward neural networks. They were “invented” in 2006 by G. Huang and are based on the idea of approximating a matrix inverse.

If you’re not familiar with ELMs please check out my article “Introduction to Extreme Learning Machines” first.

When did the evolution start?

I-ELM (2006)

After the original publication in 2006, Huang and his associates published another paper on a different type of ELM called I-ELM (Incremental ELM). As the name says, I-ELM is an incremental version of the standard ELM network. The idea of I-ELM is quite simple:

Define the maximum number of hidden nodes $L$ and the expected training accuracy $\epsilon$. Starting from $l = 0$ ($l$ is the current number of hidden nodes):

- Increment $l$ by one

- Initialize the weights and bias of the newly added hidden neuron randomly (do not reinitialize already existing neurons)

- Calculate the output vector $H$

- Calculate the weight vector $\hat{\beta}$

- Calculate the error $E$ after adding the node

- Check if $E < \epsilon$

- If not, increase the number of hidden nodes and repeat the process.

There is a chance that at some point in the process $l > L$ and $E > \epsilon$. At this point, we should repeat the whole process of training and initialization.
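The steps above can be sketched in a few lines of NumPy. This is only a minimal illustration, not the authors' implementation: the function name `train_i_elm`, the sigmoid activation, the use of RMSE as the error, and the toy data are all my assumptions. The output weights are recomputed with a pseudoinverse after every added node, which is exactly what makes the approach expensive for large networks.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_i_elm(X, T, max_nodes, eps, rng):
    """Grow the hidden layer one random node at a time until RMSE < eps."""
    n_features = X.shape[1]
    W = np.empty((n_features, 0))   # hidden weights, one column per node
    b = np.empty(0)                 # hidden biases, one entry per node
    beta, errors = None, []
    for _ in range(max_nodes):
        # Add one random node; already existing nodes are left untouched.
        W = np.hstack([W, rng.uniform(-1, 1, size=(n_features, 1))])
        b = np.append(b, rng.uniform(-1, 1))
        H = sigmoid(X @ W + b)              # hidden-layer output matrix
        beta = np.linalg.pinv(H) @ T        # least-squares output weights
        err = np.sqrt(np.mean((H @ beta - T) ** 2))
        errors.append(err)
        if err < eps:                       # expected accuracy reached
            break
    return W, b, beta, errors

# Toy regression problem (assumed data, for illustration only).
rng = np.random.default_rng(0)
X = np.linspace(-1, 1, 50).reshape(-1, 1)
T = np.sin(3 * X)
W, b, beta, errors = train_i_elm(X, T, max_nodes=30, eps=0.05, rng=rng)
print("hidden nodes:", len(errors), "final RMSE:", errors[-1])
```

If the loop ends with the error still above `eps`, the node budget was exhausted, which corresponds to the restart case described above.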

The idea of incrementing the size of the network is not new and usually produces better results than setting the network size “by hand”. There is one disadvantage which is especially important in terms of ELMs… computation time. If your network happens to be large (let’s say 1000 hidden nodes), in the worst case we have to perform 1000 matrix inversions.

If you’re interested in I-ELM, you should know there are many variations of it:

- II-ELM (improved I-ELM)

- CI-ELM (convex I-ELM)

- EI-ELM (enhanced I-ELM)

I’m not going to explain every one of them because this article should be just a quick summary and a place to start instead of a whole book about all variations of ELMs. Besides that, probably every person reading this is here not by mistake and knows how to find more information about an interesting topic if they know what to look for :P

P-ELM (2008)

After introducing an incremental version of ELM, another improvement was to use pruning to achieve the optimal structure of the network. P-ELM (pruned ELM) was introduced in 2008 by Hai-Jun Rong. The algorithm starts with a very large network and removes nodes that are not relevant to predictions. By “not relevant” we mean that the node is not taking part in predicting the output value (i.e. its output value is close to 0). This idea was able to produce smaller classifiers and is mostly suitable for pattern classification.
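To make the pruning idea concrete, here is a rough sketch. It is not the criterion from the P-ELM paper: as a stand-in I rank each hidden node by the size of its contribution to the output (an assumption of mine), keep the top `n_keep` nodes, and refit the output weights. The names `prune_elm` and `hidden_output`, the tanh activation, and the toy data are also hypothetical.

```python
import numpy as np

def hidden_output(X, W, b):
    return np.tanh(X @ W + b)

def prune_elm(X, T, n_hidden, n_keep, rng):
    """Train a large ELM, keep the n_keep most relevant nodes, then refit."""
    W = rng.normal(size=(X.shape[1], n_hidden))
    b = rng.normal(size=n_hidden)
    H = hidden_output(X, W, b)
    beta = np.linalg.pinv(H) @ T
    # Relevance of node j: Frobenius norm of its contribution H[:, j] * beta[j].
    relevance = np.linalg.norm(H, axis=0) * np.linalg.norm(beta, axis=1)
    keep = np.sort(np.argsort(relevance)[-n_keep:])
    W, b = W[:, keep], b[keep]
    # Refit the output weights on the pruned network.
    beta = np.linalg.pinv(hidden_output(X, W, b)) @ T
    return W, b, beta

# Toy two-class problem with one-hot targets (assumed data).
rng = np.random.default_rng(0)
X = rng.normal(size=(200, 2))
labels = (X[:, 0] * X[:, 1] > 0).astype(int)
T = np.eye(2)[labels]
W, b, beta = prune_elm(X, T, n_hidden=100, n_keep=30, rng=rng)
pred = np.argmax(hidden_output(X, W, b) @ beta, axis=1)
accuracy = np.mean(pred == labels)
print("nodes kept:", W.shape[1], "training accuracy:", accuracy)
```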

EM-ELM (2009)

This version of ELM is not a standalone version but an improvement of I-ELM. EM stands for Error-Minimized, and it allows adding a group of nodes instead of only one. Those nodes are inserted randomly into the network until the error is below the expected value $\epsilon$.
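Below is a sketch of growing the network in groups. To avoid recomputing the full pseudoinverse every time, it uses the standard block update for the pseudoinverse of a matrix with appended columns. Treat it as an illustration under my own assumptions (function name `train_em_elm`, sigmoid activation, RMSE as the error, toy data), not as a faithful copy of the paper's algorithm.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def train_em_elm(X, T, group_size, max_nodes, eps, rng):
    """Add random hidden nodes in groups, updating the pseudoinverse in blocks."""
    d = X.shape[1]
    W = rng.normal(size=(d, group_size))
    b = rng.normal(size=group_size)
    H = sigmoid(X @ W + b)
    H_pinv = np.linalg.pinv(H)     # full pseudoinverse only for the first group
    while True:
        beta = H_pinv @ T
        err = np.sqrt(np.mean((H @ beta - T) ** 2))
        if err < eps or W.shape[1] + group_size > max_nodes:
            return W, b, beta, err
        # Draw a new group of random nodes.
        W_new = rng.normal(size=(d, group_size))
        b_new = rng.normal(size=group_size)
        dH = sigmoid(X @ W_new + b_new)
        # Block update of the pseudoinverse of [H, dH]:
        # D handles the part of dH that the existing nodes cannot explain.
        D = np.linalg.pinv(dH - H @ (H_pinv @ dH))
        U = H_pinv - (H_pinv @ dH) @ D
        H_pinv = np.vstack([U, D])
        H = np.hstack([H, dH])
        W = np.hstack([W, W_new])
        b = np.concatenate([b, b_new])

# Toy regression problem (assumed data).
rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(200, 5))
T = np.sin(X.sum(axis=1, keepdims=True))
W, b, beta, err = train_em_elm(X, T, group_size=5, max_nodes=40, eps=0.01, rng=rng)

# Sanity check: the incremental solution should match a direct least-squares fit.
H = sigmoid(X @ W + b)
direct_err = np.sqrt(np.mean((H @ (np.linalg.pinv(H) @ T) - T) ** 2))
print("nodes:", W.shape[1], "incremental RMSE:", err, "direct RMSE:", direct_err)
```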

Regularized ELM (2009)

Starting in 2009, Zheng studied the stability and generalization performance of ELM. He and his team came up with the idea of adding regularization to the original formula for calculating the output weights $\hat{\beta}$.

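Right now it looks like (with $C$ as the regularization constant):

$$\hat{\beta} = \left(\frac{I}{C} + H^T H\right)^{-1} H^T T$$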
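Here is a small sketch of what regularization does in practice. I'm assuming the regularization enters as a ridge-style term $I/C$ added to $H^T H$, with a hypothetical constant `C`; the function name, tanh activation, and toy data are mine as well. A small `C` means strong regularization and should shrink the output weights.

```python
import numpy as np

def train_regularized_elm(X, T, n_hidden, C, rng):
    """ELM whose output weights are solved with a ridge-style penalty."""
    W = rng.normal(size=(X.shape[1], n_hidden))
    b = rng.normal(size=n_hidden)
    H = np.tanh(X @ W + b)
    # beta = (I / C + H^T H)^{-1} H^T T, solved without forming the inverse.
    A = np.eye(n_hidden) / C + H.T @ H
    beta = np.linalg.solve(A, H.T @ T)
    return W, b, beta

# Noisy toy data; the same seed gives both models the same hidden layer.
X = np.linspace(-1, 1, 30).reshape(-1, 1)
T = np.sin(3 * X) + 0.1 * np.random.default_rng(1).normal(size=X.shape)
_, _, beta_weak = train_regularized_elm(X, T, 50, C=1e6, rng=np.random.default_rng(0))
_, _, beta_strong = train_regularized_elm(X, T, 50, C=1e-2, rng=np.random.default_rng(0))
print("weak regularization, weight norm:  ", np.linalg.norm(beta_weak))
print("strong regularization, weight norm:", np.linalg.norm(beta_strong))
```

Using `np.linalg.solve` instead of an explicit inverse is a numerical choice of mine; it computes the same quantity.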

TS-ELM (2010)

Two-stage ELM (TS-ELM) was a proposal to once again minimize the network structure. Like the name says, it consists of two stages:

- Applying a forward recursive algorithm to choose the hidden nodes from candidates generated randomly in each step. Hidden nodes are added until the stopping criterion is met.

- Review of an existing structure. Even if we created a network with the minimum number of nodes to match our criterion, some of them might no longer be that useful. In this stage, we’re going to remove unimportant nodes.
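The two stages can be sketched naively as follows. This version refits the network from scratch for every candidate, so it only shows the selection logic and not the recursive algorithm from the paper. The stopping criterion (training RMSE below `eps`), the removal tolerance `tol`, and all names are assumptions of mine.

```python
import numpy as np

def features(X, W, b):
    return np.tanh(X @ W + b)

def fit_error(H, T):
    beta = np.linalg.pinv(H) @ T
    return np.sqrt(np.mean((H @ beta - T) ** 2))

def two_stage_elm(X, T, n_candidates, max_nodes, eps, tol, rng):
    d = X.shape[1]
    W, b = np.empty((d, 0)), np.empty(0)
    # Stage 1: in each step, add the best of several random candidate nodes.
    while W.shape[1] < max_nodes:
        cand_W = rng.normal(size=(d, n_candidates))
        cand_b = rng.normal(size=n_candidates)
        errs = [fit_error(features(X, np.hstack([W, cand_W[:, [j]]]),
                                   np.append(b, cand_b[j])), T)
                for j in range(n_candidates)]
        best = int(np.argmin(errs))
        W = np.hstack([W, cand_W[:, [best]]])
        b = np.append(b, cand_b[best])
        if errs[best] < eps:
            break
    n_stage1 = W.shape[1]
    # Stage 2: review the structure and drop nodes that are no longer needed.
    limit = fit_error(features(X, W, b), T) + tol
    j = 0
    while j < W.shape[1] and W.shape[1] > 1:
        W_try, b_try = np.delete(W, j, axis=1), np.delete(b, j)
        if fit_error(features(X, W_try, b_try), T) <= limit:
            W, b = W_try, b_try     # node j was not important
        else:
            j += 1
    return W, b, n_stage1

# Toy regression problem (assumed data).
rng = np.random.default_rng(0)
X = rng.uniform(-1, 1, size=(100, 2))
T = X[:, :1] * X[:, 1:]
W, b, n_stage1 = two_stage_elm(X, T, n_candidates=5, max_nodes=15,
                               eps=0.02, tol=1e-3, rng=rng)
print("nodes after stage 1:", n_stage1, "after stage 2:", W.shape[1])
```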

KELM (2010)

Kernel-based ELM (KELM) uses a kernel function instead of $HH^T$ (the matrix of inner products between hidden-layer outputs). This idea was inspired by SVM and the main kernel function used with ELMs is RBF (Radial Basis Function). KELMs are used to design Deep ELMs.
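A minimal kernel-based sketch with an RBF kernel is shown below. It assumes the usual regularized formulation in which a kernel matrix takes the place of the hidden-layer inner products; the regularization constant `C`, the kernel width `gamma`, the function names, and the toy data are my assumptions. Note that no random hidden weights appear at all.

```python
import numpy as np

def rbf_kernel(A, B, gamma):
    """K[i, j] = exp(-gamma * ||A[i] - B[j]||^2)."""
    sq = (np.sum(A ** 2, axis=1)[:, None]
          + np.sum(B ** 2, axis=1)[None, :]
          - 2 * A @ B.T)
    return np.exp(-gamma * np.maximum(sq, 0.0))

def train_kelm(X, T, C, gamma):
    # The kernel matrix replaces the explicit hidden-layer output.
    omega = rbf_kernel(X, X, gamma)
    return np.linalg.solve(np.eye(len(X)) / C + omega, T)

def predict_kelm(X_train, alpha, X_new, gamma):
    return rbf_kernel(X_new, X_train, gamma) @ alpha

# Toy regression problem (assumed data).
X = np.linspace(-1, 1, 40).reshape(-1, 1)
T = np.sin(3 * X)
alpha = train_kelm(X, T, C=1e3, gamma=10.0)
pred = predict_kelm(X, alpha, X, gamma=10.0)
rmse = np.sqrt(np.mean((pred - T) ** 2))
print("training RMSE:", rmse)
```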

V-ELM (2012)

Voting-based ELM (V-ELM) was proposed in 2012 to improve performance on classification tasks. The problem was that the standard training process of ELM might not achieve the optimal classification boundary when nodes are added randomly. Because of that, some samples that are near that boundary might be misclassified. In V-ELM we’re not training just one network but many of them and then, based on majority voting, selecting the final class label.
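As an illustration, here is a sketch that trains several independent ELMs and combines their predictions by majority vote. The number of models, the network size, the tanh activation, the helper names, and the toy data are all assumptions for the sake of the example.

```python
import numpy as np

def train_elm(X, T, n_hidden, rng):
    W = rng.normal(size=(X.shape[1], n_hidden))
    b = rng.normal(size=n_hidden)
    beta = np.linalg.pinv(np.tanh(X @ W + b)) @ T
    return W, b, beta

def predict_labels(model, X):
    W, b, beta = model
    return np.argmax(np.tanh(X @ W + b) @ beta, axis=1)

def majority_vote(votes, n_classes):
    """votes has shape (n_models, n_samples); returns the winning class per sample."""
    n_samples = votes.shape[1]
    counts = np.zeros((n_classes, n_samples), dtype=int)
    for row in votes:                       # one row of predictions per model
        counts[row, np.arange(n_samples)] += 1
    return np.argmax(counts, axis=0)

# Toy two-class problem with one-hot targets (assumed data).
rng = np.random.default_rng(0)
X = rng.normal(size=(150, 2))
labels = (X[:, 0] + X[:, 1] > 0).astype(int)
T = np.eye(2)[labels]

# Each model gets its own random hidden layer.
models = [train_elm(X, T, n_hidden=10, rng=rng) for _ in range(7)]
votes = np.stack([predict_labels(m, X) for m in models])
final = majority_vote(votes, n_classes=2)
print("ensemble training accuracy:", np.mean(final == labels))
```

An odd number of models avoids ties in the two-class case; with ties, `np.argmax` simply picks the lowest class index.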

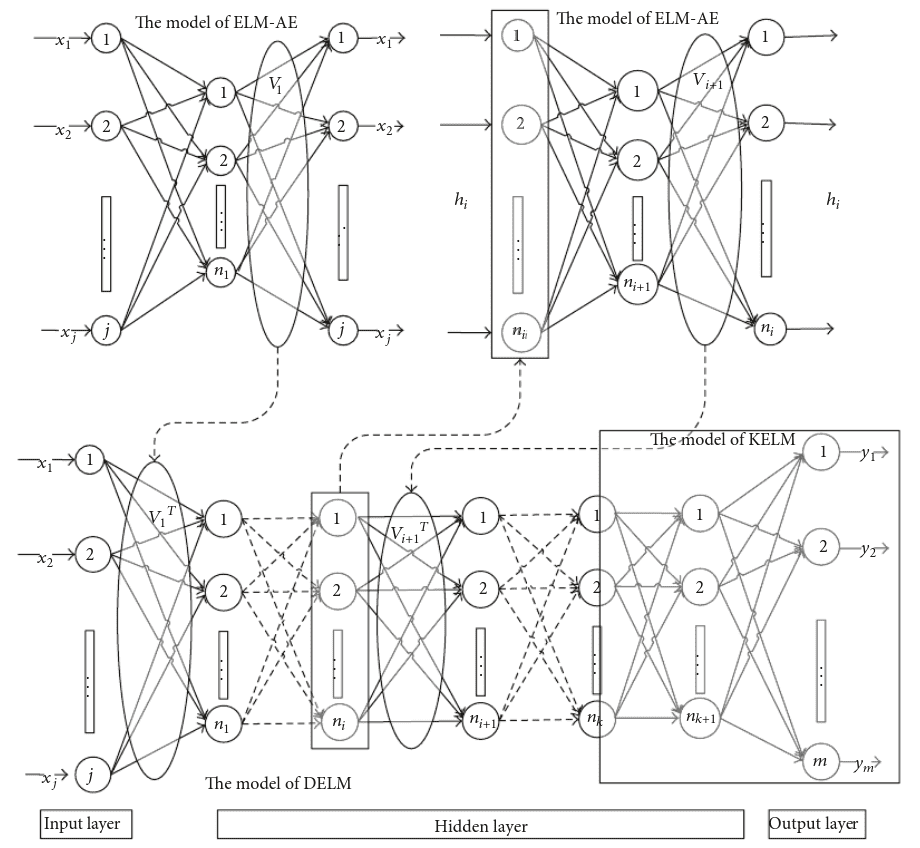

ELM-AE (2013)

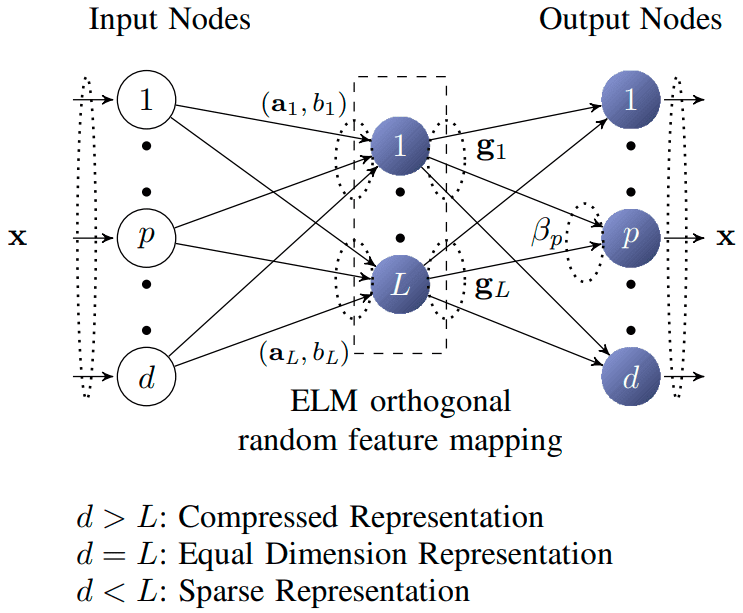

In 2013, when ideas like RBMs and autoencoders were starting to get popular, Kasun et al. published a paper on ELM-AE (ELM Auto-Encoder). The main goal is to be able to reproduce the input vector, just as standard autoencoders do. The structure of ELM-AE looks the same as that of a standard ELM.

There are three types of ELM-AE:

- Compression. Higher-dimensional input space to the lower-dimensional hidden layer (fewer hidden nodes than input nodes).

- Equal representation. Data dimensionality remains the same (same number of hidden and input nodes).

- Sparsing. Lower-dimensional input space to the higher-dimensional hidden layer (more hidden nodes than input nodes).

There are two main differences between standard ELMs and ELM-AE. The first one is that ELM-AE is unsupervised: as the output, we’re using the same vectors as the input. The second is that the weights in ELM-AE are orthogonal, and the same goes for the bias in the hidden layer. This is important because ELM-AE is used to create a deep version of ELMs.
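Both differences are easy to show in code. In this sketch the orthogonal weights come from a QR decomposition of a random matrix and the bias vector is scaled to unit norm. The ridge-style solve with a constant `C`, the tanh activation, and the helper names are my assumptions, not something taken from the paper.

```python
import numpy as np

def orthogonal_weights(n_in, n_hidden, rng):
    """Random (n_in, n_hidden) matrix with orthonormal columns (or rows, if n_hidden > n_in)."""
    A = rng.normal(size=(max(n_in, n_hidden), min(n_in, n_hidden)))
    Q, _ = np.linalg.qr(A)                 # Q has orthonormal columns
    return Q if n_in >= n_hidden else Q.T

def train_elm_ae(X, n_hidden, C, rng):
    W = orthogonal_weights(X.shape[1], n_hidden, rng)
    b = rng.normal(size=n_hidden)
    b /= np.linalg.norm(b)                 # unit-norm bias vector
    H = np.tanh(X @ W + b)
    # Unsupervised: the targets are the inputs themselves.
    beta = np.linalg.solve(np.eye(n_hidden) / C + H.T @ H, H.T @ X)
    return W, b, beta

# Compression case: 8 input features, 4 hidden nodes (assumed toy data).
rng = np.random.default_rng(0)
X = rng.normal(size=(100, 8))
W, b, beta = train_elm_ae(X, n_hidden=4, C=1e3, rng=rng)
X_rec = np.tanh(X @ W + b) @ beta          # reconstruction of the input
print("reconstruction RMSE:", np.sqrt(np.mean((X_rec - X) ** 2)))

# Sparsing case: more hidden nodes than inputs, so the rows are orthonormal.
W_sparse = orthogonal_weights(3, 5, rng)
```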

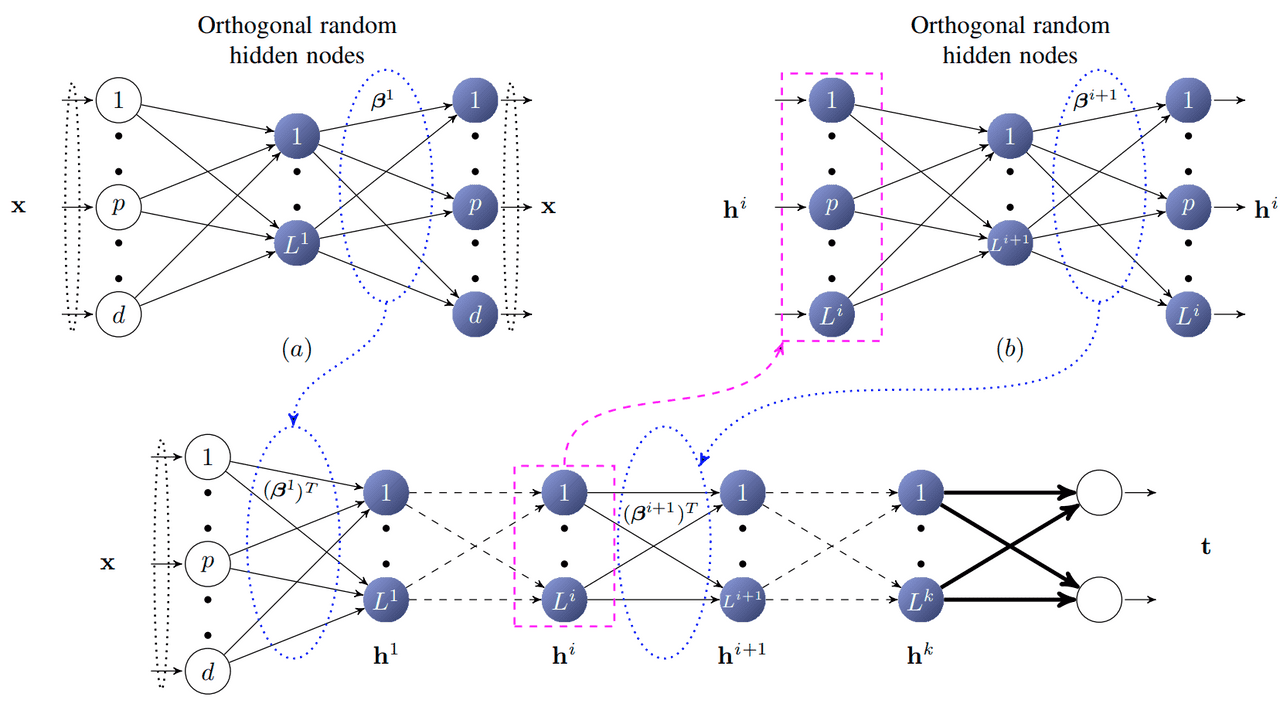

MLELM (2013)

In the same paper (Representational Learning with ELMs for Big Data) Kasun proposed a version of ELM called Multi-Layer ELM. This idea is based on stacked autoencoders and consists of multiple ELM-AEs.

You might ask “Why even bother with creating something similar to stacked autoencoders but with ELMs?”. If we look at how MLELM works we can see that it doesn’t require fine-tuning. That makes it a lot faster to construct than standard autoencoder networks. Like I’ve said, MLELM uses ELM-AEs to train the parameters in each layer and removes the output layers, so we’re left with only the input and hidden layers of the ELM-AEs.

DELM (2015)

Deep ELM is one of the newest versions (and the last major iteration in the ELM evolution at the time of writing this article). DELMs are based on the idea of MLELMs with the use of KELM as the output layer.

Conclusion

ELMs have been evolving through the years, definitely borrowing some major ideas from the field of machine learning. Some of those ideas work really well and could be useful when designing real-life models. You should remember that this is just a brief summary of what happened in the field of ELM, not a complete review (not even close). It’s highly probable that if you put some prefix before ELM, there is already a version of ELM with that prefix :)

References:

- Guang-Bin Huang, Qin-Yu Zhu, Chee-Kheong Siew. “Extreme learning machine: Theory and applications”, 2006.

- Guang-Bin Huang, Lei Chen, Chee-Kheong Siew. “Universal Approximation Using Incremental Constructive Feedforward Networks With Random Hidden Nodes”, 2006.

- Rong, Hai-Jun & Ong, Yew & Tan, Ah-Hwee & Zhu, Zexuan. (2008). A fast pruned-extreme learning machine for classification problem. Neurocomputing.

- Feng, Guorui & Huang, Guang-Bin & Lin, Qingping & Gay, Robert. (2009). Error Minimized Extreme Learning Machine With Growth of Hidden Nodes and Incremental Learning.

- Wanyu, Deng & Zheng, Qinghua & Chen, Lin. (2009). Regularized Extreme Learning Machine.

- Lan, Y., Soh, Y. C., & Huang, G.-B. (2010). Two-stage extreme learning machine for regression.

- Xiao-jian Ding, Xiao-guang Liu, and Xin Xu. (2016). An optimization method of extreme learning machine for regression.

- Cao, Jiuwen & Lin, Zhiping & Huang, Guang-Bin & Liu, Nan. (2012). Voting based extreme learning machine.

- Kasun, Liyanaarachchi & Zhou, Hongming & Huang, Guang-Bin & Vong, Chi-Man. (2013). Representational Learning with ELMs for Big Data.

- Ding, Shifei & Zhang, Nan & Xu, Xinzheng & Guo, Lili & Zhang, Jian. (2015). Deep Extreme Learning Machine and Its Application in EEG Classification.